Running systems library as Cloud Function.

I was chatting with my friend Bobby Powers, and he mentioned a systems dynamics app he’s been hacking on. That conversation inspired me to finish a project I’ve been neglecting, which is exposing systems as an HTTP endpoint running within a Google Cloud Function.

The rough goals of this exercise were: (1) expose systems via an HTTP API,

(2) run it as a function, aka serverless, to minimize maintenance and cost,

(3) the development workflow should be very simple since I’ll otherwise forget how it works when I periodically forget about it,

(4) it should be extensible for me to add a JavaScript GUI that calls into the API at some later point.

These goals have been accomplished, although it was a bit trickier than I expected.

I already had the Google Cloud SDK installed, so I skipped over that bit. Next I moved on to create a new Git repository:

    cd sys-app
    git init

Initially it was just three files. The first was an HTTP function at sys-app/functions/run-model/main.py:

    def hello_get(request):
        return 'Hello World!'

The second was in that same directory at sys-app/functions/run-model/requirements.txt and specified the only Python library dependency:

    systems==0.1.0

The final file was a build configuration for Cloud Build:

    steps:
    - name: 'gcr.io/cloud-builders/gcloud'
      args: ['functions',
             'deploy',
             'run_model',
             '--trigger-http',
             '--runtime',
             'python37'
            ]
      dir: 'functions/run-model'

Then I created a new private repository on GitHub and synced the local repo up:

    git remote add origin git@github.com:lethain/sys-app.git
    git push -u origin master

Then I updated Cloud Source Repositories on GCP to mirror over lethain/sys-app. I could have also just connected to the repository directly on GitHub instead of mirroring it over, but for whatever reason I sort of prefer mirroring over and then getting to operate on it directly within Cloud Source, as it’s a bit more decoupled.

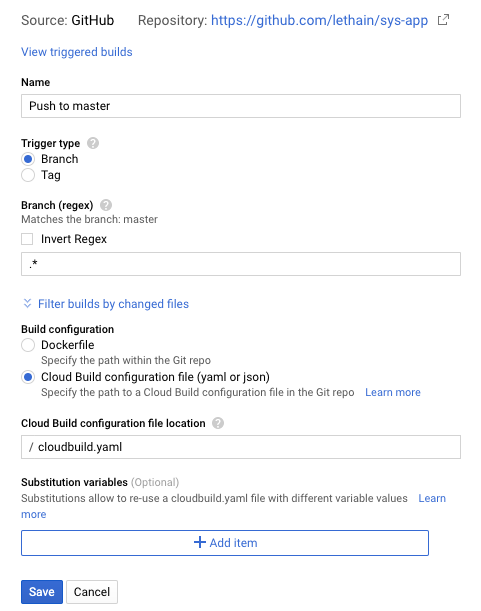

I started following the steps to do automatic builds. First I enabled Cloud Build, then created a build trigger firing on every push to the master branch of sys-app.

As a caveat, I know it says GitHub is the source, but the source I selected was the Cloud Source mirror. As best I can tell, this is just a weird UI bug.

The penultimate step was granting Cloud Function deploy permissions to Cloud Build, as it’s documented here. I initially missed this step, and honestly found it a bit confusing even following the directions word for word, but I eventually got it (I debugged by continuing on with the next steps and futzing around until it all worked).

At this point, I committed another change to the sys-app repository and pushed it, and voilà, the build trigger fired, the function was created, and it all worked.

In full transparency, I got stuck here for some time: everything seemed to work and new versions of the function were created on each deploy, but the code it ran never got updated. I had created that function through the UI, and there just wasn’t much useful context in the logs to say why it wasn’t updating, so I went ahead and deleted it and relied on the Cloud Build configuration to create and update a new function, at which point it worked properly.

I’m able to verify the function through the gcloud CLI:

    gcloud functions call run_model \
        --data '{"definition": "a > b @ 1"}' \
        --project=PROJECT

Alternatively, I can also call it over HTTP in Python:

    import requests

    definition = """[a] > b @ 5
    b > c @ 4"""

    resp = requests.post('https://$MY-FUNCTION-URL',
                         json={'definition': definition})
    print(resp.content.decode("utf-8"))
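Both calls above send a JSON body with a single definition key. As a rough sketch of how a handler might read that payload (this is not the actual sys-app code), the example below wraps a hypothetical run_definition callable, which stands in for whatever calls into the systems library. FakeRequest and make_run_model are illustrative names too. The only real API assumed is Flask's request.get_json, since Python Cloud Functions receive a Flask request object.

```python
import json


def make_run_model(run_definition):
    """Build an HTTP handler around a hypothetical run_definition(text) -> str."""

    def run_model(request):
        # get_json(silent=True) returns None instead of raising when the
        # body is missing or is not valid JSON.
        payload = request.get_json(silent=True)
        if not isinstance(payload, dict):
            payload = {}
        definition = payload.get("definition")
        if not definition:
            # Flask-style (body, status) tuple for a client error.
            return (json.dumps({"error": "missing 'definition'"}), 400)
        return (run_definition(definition), 200)

    return run_model


class FakeRequest:
    """Stand-in for flask.Request, only for trying the handler locally."""

    def __init__(self, payload):
        self._payload = payload

    def get_json(self, silent=False):
        return self._payload


# Placeholder that echoes the spec; the real function would run the model.
handler = make_run_model(lambda text: "ran: " + text)
print(handler(FakeRequest({"definition": "a > b @ 1"})))
print(handler(FakeRequest(None)))
```

Naming the inner function run_model matches the function name passed to the deploy step in the build configuration, and injecting the model runner keeps the request parsing easy to exercise without deploying anything.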

I’m now able to modify sys-app, push the new commit, and the function updates automatically behind the scenes, which was pretty much the original goal.

Unlike with my GKE experiments, I was able to get this deployment flow without creating any sort of services to handle scheduling upgrades after builds completed or whatnot, which is quite nice. (Looking at the kubectl cloud builder, I bet I could figure out a way to do continuous deployments to k8s without a service monitoring the build pub-sub queue. I’m not sure if this is new functionality or if I just missed it last time.)

If I were going to be developing with this workflow frequently, my next step would be to figure out how to run tests in a blocking fashion on each build, but it doesn’t seem like there is a good way to do that for Python (they’re missing pip as a cloud-builder, so I think I’d need to set up a Dockerfile to use for testing, which is totally fine, but not something I’m particularly excited for).
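To make the idea of a blocking test concrete, here is a minimal sketch of the kind of check such a build step could run, using only the standard library's unittest. It assumes the hello_get function from main.py shown earlier and copies it inline so the snippet runs on its own. The run_tests helper is a hypothetical name, and how the step would be wired into Cloud Build is left open.

```python
import unittest


def hello_get(request):
    # Copy of the function from main.py so this sketch is self-contained;
    # a real test file would import it from main instead.
    return 'Hello World!'


class HelloGetTest(unittest.TestCase):
    def test_returns_greeting(self):
        # The handler ignores its request argument, so None is enough here.
        self.assertEqual(hello_get(None), 'Hello World!')


def run_tests():
    """Run the suite and report whether every test passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(HelloGetTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()


# A build step would turn a False here into a non-zero exit status,
# which is what would stop the deploy from proceeding.
passed = run_tests()
print("tests passed" if passed else "tests failed")
```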

Altogether, there is a great user experience just over the hill from what GCP is offering. Imagine how delightful this user experience would be if, after I created a new Cloud Function, I could select a checkbox and it would automatically deploy each time I changed it in the Cloud Source repository? Imagine further yet if I could include a test.py that would automatically run before attempting to deploy an update?

You absolutely can build all of this yourself, but why should each user need to add this glue? This is why platforms like Netlify are cropping up despite the cloud vendors having all the right components underneath. This evolution reminds me a bit of AWS’ attempt to move downstream into the personal VM market with Amazon Lightsail, executing a few years too late on what other hosting providers like Slicehost and now DigitalOcean have realized some users want.

A good reminder for all of us that we should take a few hours each quarter and integrate our products from scratch and see what the rough edges are.