The physics of Cloud expansion.

Your first puzzle in a new company is debugging which buzzy startup era they were founded in. Ruby on Rails? Some MongoDB or Riak? Ah, Clojure, that was an exciting time. The tech stack is even a strong indicator of where the early engineers previously worked.

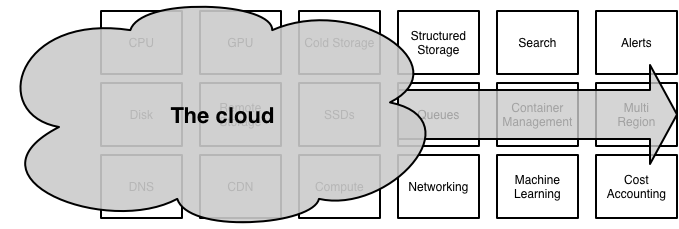

With many new technologies being released every year, it should get harder to place companies into their era, but I find it’s getting much easier. The staccato drumbeat of JavaScript frameworks has helped, but lately the best indicator has been which components are managed internally, and which have been moved to the cloud. Most recently, the move from VMs to containers has fingerprinted companies as surely as the previous move from servers to VMs.

It’s particularly interesting to think about how this trend is redefining the role of infrastructure engineering at companies that build on the cloud. How will the cloud’s steady advance shape our future work, and what can we do today to prepare for those changes?

Principles of Cloud expansion

The cloud is progressing according to these principles:

- Cloud providers will launch an accelerating number of exploratory products to test for value and product-market fit. These exploratory solutions require architectural decisions that new companies adopt easily, but are challenging to retrofit. ECS is a good example from several years ago: it explored the container opportunity, had a rocky value proposition at launch, and has subsequently come into its own. AWS Lambda, Azure Functions and Google Cloud Functions are great examples of solutions in this space today.

- Most of these exploratory solutions will eventually convert into something sufficiently commoditized and usable that even many existing companies are able to adopt them. Today, the time to evolve from exploratory to mature is about three years.

- As the cloud providers themselves grow, they’ll find it increasingly difficult to prioritize small opportunities (including some opportunities they currently support, which will become too small to justify supporting).

- Over time, cloud providers will solve any generalizable problem, even if it’s incredibly hard, but won’t solve any specialized problems, even if they’re very easy. The positive example of this is AWS KMS providing hardware security modules, and the negative example is Lambda still having very constrained language support. Caveat: they’re quite willing to do custom work for large users, so this principle gets blurred as your cloud spend goes up.

- As cloud adoption grows, increasingly the majority of growth opportunities for cloud providers will come from convincing companies to move off their existing cloud provider. This has rewritten their priorities in a jarring way:

  - reduce migration cost, particularly through common interfaces like the Kubernetes APIs, Envoy, etc.,

  - provide best value, especially in reliability, security, latency and cost,

  - provide differentiated capabilities to inspire internal advocates for the move, such as GCP’s machine learning strategy with Beam.

Competitive advantages of internal infrastructure

Cloud providers have many advantages over internal infrastructure providers, but there are also a number of structural advantages that come from being responsible for just one company’s specific infrastructure needs:

- We don’t require the economies of scale that cloud providers do; we have the freedom to do custom and specialized work. Azure Functions, AWS Lambda and Google Cloud Functions are great examples: they’re only able to offer implementations for a few languages given the degree of language and language sandboxing support they require. We could support any language our company wants.

- We have the opportunity to understand our business domain deeply. We can target the specific compliance regimes, threat models, testing and other aspects that are unique to our company’s business.

- We have direct and frequent access to our users. We can spend a lot more time understanding and specializing our work to support the smaller, more specific set of folks who use it.

Where should we keep investing?

Building on the competitive advantages of the cloud and internal providers, let’s explore some common areas of infrastructure. For each, the question is whether they’re still worth investing in, and if not, how we can start disengaging.

Network

Networking is so complex that it seemed unlikely the cloud could reproduce its functionality. This has largely been true (multicast on the cloud, anyone?), but it’s not working out quite how I suspect networking equipment providers hoped: requirements that were historically network layer concerns are increasingly moving up the stack into the application layer (e.g. Envoy offering a “service discovery service” in addition to DNS). It’s already possible to build and maintain sophisticated infrastructure with little understanding of the networking beneath it.

The cloud’s rigid inflexibility around networking configuration (particularly in the early cloud, although slightly less true as AWS VPC, GCP VPC, and similar products have expanded their capabilities) has contributed to this shift, requiring infrastructure providers to innovate around the new networking constraints, and reducing network-configuration dependencies.

Overall, I expect the cloud to absorb most network functionality over the next several years:

- Clouds have already won on core network quality, especially GCP, with fast, reliable connectivity, more ingress points than most companies can reasonably maintain, and faster response to routing problems than you’re likely to be able to provide.

- Basic CDN functionality is and will continue to be simpler and cheaper on the cloud than doing it yourself and cheaper than most dedicated CDN providers.

- Clouds are racing to add edge compute capabilities, like Lambda@Edge. Their convenience means that dedicated edge compute providers like Fastly and Cloudflare Workers will have to provide significantly more value or convenience to convince folks to remain with them, which seems fairly unlikely.

- DDoS mitigation, long the specialty of companies like Prolexic, appears situated to move to cloud products like Alibaba Cloud’s Anti-DDoS, AWS Shield and Azure’s DDoS protection. The clouds already need that capacity to mitigate DDoS attacks against their own assets, meaning the incremental cost for them to offer these products is very low relative to dedicated companies that have to maintain dedicated fleets for mitigation.

- Because networking is increasingly a commodity rather than a differentiator for the clouds (and increasingly their competition is each other, and not a customer’s existing physical infrastructure), they’ll provide cloud-agnostic interfaces and tooling like CNI to make it easier to migrate across networking setups. I expect the pattern Kubernetes demonstrated, converging on a common orchestration interface, to repeat here, perhaps with something like Istio.

- Even with your own hardware, the clouds have a unique economy of scale to push on the boundaries of performance on the basics: e.g. Azure using FPGAs to drive 30Gbps over TCP/IP between VMs isn’t something you’re likely to do.

Internal infrastructure providers can still provide value, particularly by grappling with the less scalable complexities that clouds shirk:

- Writing and maintaining health checks will remain sufficiently complex and specific to individual applications to resist commoditization by cloud offerings.

- The sheer complexity of user identity, service identity and the surrounding problem space (authn, authz, SSO) has continued to resist wholesale movement to the cloud, and I suspect this will continue to be true for several years. AWS IAM and GCP IAM will continue to edge closer, but will be constrained by opposing needs for infinite configurability for enterprise versus simplicity for smaller companies.

- Multi-region redundancy will continue to require application layer changes, and latency across regions will continue to be constrained by the speed of light.

- Some enterprise software developed in previous eras, like Vertica, requires network tuning to operate as designed. It will be an interesting race to see if clouds provide the ability to perform this tuning or if sub-optimal performance on existing clouds kills off these products before that happens.
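To put a rough number on the speed-of-light constraint in the multi-region item above, here is a back-of-the-envelope sketch. The two-thirds-of-c figure for light in optical fiber is a common approximation, and the 4,000 km distance is a hypothetical example, not a measurement between any real pair of regions.

```python
# Lower bound on cross-region round-trip time, ignoring routing and switching.
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum
FIBER_FACTOR = 2 / 3               # assumption: light in fiber travels at roughly 2/3 c


def min_round_trip_ms(distance_km: float) -> float:
    """Return the theoretical minimum round-trip time in milliseconds."""
    one_way_seconds = distance_km / (SPEED_OF_LIGHT_KM_S * FIBER_FACTOR)
    return 2 * one_way_seconds * 1000


# Hypothetical distance between two regions.
print(f"{min_round_trip_ms(4000):.1f} ms")  # about 40 ms
```

Real round trips are slower, since routes are not straight lines and every hop adds delay, but no provider can go below this floor. Any design that needs synchronous cross-region coordination has to budget for it at the application layer.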

Overall, networking, routing and related concerns will be significantly commoditized over the next few years.

Compute

Compute can loosely be broken into orchestration, build and deployment. While every company must provide some solution to these problems, most small companies have simple requirements and their needs have been met by opinionated PaaS providers like Heroku for quite some time. Large companies have continued to grow out of most PaaS providers, seeking increased configuration, lower costs, and enough portability to prevent vendor lock-in.

Cloud providers have reached sufficient scale to make optimizations few companies can make, and their buying scale allows them to own the SSD market. Recently, Amazon announced AWS EKS, joining Azure AKS and GKE to become the third major cloud provider to commit to supporting Kubernetes as an interface, and providing a unified compute interface to the cloud. This unified interface will reduce cross-cloud migration costs, and signal that cloud providers view compute as a commodity where they’ll compete on value. This reduction to the interface is especially obvious in projects like virtual kubelet, which couples the Kubernetes interface with the isolation and scalability of Azure ACI or AWS Fargate (e.g. what if you didn’t have to solve the networking security problem in your Kubernetes cluster? Perhaps soon you won’t!).

Cloud providers have won orchestration, but the other avenues are still ripe for investment.

The conjoined problems of build and configuration, and especially container image management, will remain a major challenge. Keeping images up to date, deploying new versions of images securely, storing images where they can be downloaded quickly (especially across geographic regions) are all hard problems.

Cloud providers will solve pieces of this problem (particularly image storage) over the next few years, but the sheer complexity will prevent commoditization. This is an area where I expect some very constrained new patterns to emerge from the clouds that allow newly developed applications to reduce their load, but which will require sufficiently broad changes to prevent migration of existing software. The defensive moat here is that fast builds are typically dangerous, and safe builds are typically slow: we’ll need to see improved techniques in this space before it’s commoditized by the cloud.

As an example, most cloud builders today don’t cache Docker layers because the actions creating them are not consistently reproducible, making it easy to poison the layer cache in subtle ways. This inability to cache aggressively makes generalized offerings significantly slower than a custom build solution. It’ll be exciting to see Bazel’s ideas compete for adoption as a possible path to safe and fast application builds, particularly when combined with container adoption merging application and environment builds into atomic artifacts.
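The cache-poisoning risk is easier to see in a simplified model. The sketch below is an assumption-laden illustration, not Docker's or Bazel's actual implementation: `layer_cache_key` is a hypothetical function that derives a key from the parent layer, the instruction text, and digests of any input files.

```python
import hashlib
from typing import Iterable


def layer_cache_key(parent_key: str, instruction: str, input_digests: Iterable[str]) -> str:
    """Hypothetical cache key: hash of parent key, instruction and input digests."""
    h = hashlib.sha256()
    h.update(parent_key.encode())
    h.update(b"\0")
    h.update(instruction.encode())
    for digest in sorted(input_digests):
        h.update(b"\0")
        h.update(digest.encode())
    return h.hexdigest()


# A step with declared inputs: changing the file content changes the key.
old = layer_cache_key("base", "COPY app.py /app/", ["digest-of-old-app.py"])
new = layer_cache_key("base", "COPY app.py /app/", ["digest-of-new-app.py"])
print(old != new)  # True: the cache is correctly invalidated

# A step with undeclared inputs: the key is identical on every run, even though
# the package index it downloads may differ from day to day.
monday = layer_cache_key("base", "RUN apt-get update", [])
friday = layer_cache_key("base", "RUN apt-get update", [])
print(monday == friday)  # True: a stale or bad layer would be reused silently
```

The key only captures what the build system can see. When a step depends on the network or the clock, the same key can map to different contents, which is why a generalized builder has to choose between caching and safety unless every input is declared.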

Application configuration, such as that provided by Consul or Etcd, will continue to be tightly enough coupled with application code and behavior to prevent commoditization by the cloud. Expect secret management tooling like AWS KMS to feature-creep towards solving the entire application configuration space over time.

Multi-region redundancy continues to require application level architecture and design, and I expect that to remain true until it’s eventually commoditized in the storage layer. GCP is attempting a “multi-region by default” strategy, most visible in the Cloud Spanner offering, but at this point adopting it would require completely buying into their platform and accepting their vendor lock-in. I’m also not familiar with many companies using that offering yet, as it’s quite new. Ultimately, I don’t think GCP’s multi-region by default strategy can succeed until other cloud providers provide similar offerings to defray the lock-in risks, and consequently I think this is a safe area of investment for internal infrastructure for the next several years.

Storage

One of AWS’ earliest successes was their Simple Storage Service, storing files cheaply and reliably. Cloud storage offerings have since expanded to NoSQL databases, relational databases and, more recently, queues (Kinesis, Pub/Sub).

There are still quite a few areas ripe for internal investment:

- While key-value point lookups will be commoditized, managing secondary indices will remain sufficiently coupled with application logic to prevent being absorbed by the cloud. (I would argue that key-value point lookups are not fully commoditized yet due to the price advantage of running your own cluster, e.g. Cassandra, versus using DynamoDB, but I expect cloud providers to erode this price advantage over the next five to ten years, and honestly I suspect they’ve already eroded the fully loaded cost.)

- Strong consistency at scale remains elusive in the cloud, with the exception of Cloud Spanner.

- Schema registration, enforcement and migration on unstructured data, especially for data going into queues.

- Graph databases (e.g. Titan) continue to be extremely useful for some categories of machine learning and network problems, and so far there are no strong offerings in the cloud.

- Storage is where existing multi-region offerings are the most limited. Any reasonable multi-region story around storage will continue to require major internal investment. Cloud Spanner and Cosmos DB are interesting exceptions here, although I’m not aware of wide adoption at scale.

- Compliance and data locality requirements like GDPR continue to evolve more rapidly than the cloud is currently able to support. Long term I anticipate cloud providers commoditizing this. Even today, they’re far better situated to spin up new regions in countries that introduce new data and compliance legislation, but they haven’t done much to capture this opportunity yet (although Azure appears somewhat ahead of the curve).

- Managing database snapshots remains a complex, nuanced area as well. The cloud has built primitives, but they still require stitching together and maintenance for e.g. managing across environments.
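As one illustration of the schema enforcement item in the list above, here is a minimal sketch of validating messages before they enter a queue. Everything in it is hypothetical: the `SCHEMAS` registry, the `user_signup` topic and the in-memory `deque` stand in for whatever registry and queue a company actually runs.

```python
from collections import deque

# Hypothetical registry: topic name -> required fields and their types.
SCHEMAS = {
    "user_signup": {"user_id": int, "email": str},
}


class SchemaError(ValueError):
    """Raised when a message does not match its topic's registered schema."""


def validate(topic: str, message: dict) -> None:
    schema = SCHEMAS.get(topic)
    if schema is None:
        raise SchemaError(f"no schema registered for topic {topic!r}")
    missing = schema.keys() - message.keys()
    if missing:
        raise SchemaError(f"missing fields: {sorted(missing)}")
    for field, expected_type in schema.items():
        if not isinstance(message[field], expected_type):
            raise SchemaError(f"field {field!r} should be {expected_type.__name__}")


def publish(queue: deque, topic: str, message: dict) -> None:
    """Reject malformed messages at the producer, before consumers ever see them."""
    validate(topic, message)
    queue.append((topic, message))


queue = deque()
publish(queue, "user_signup", {"user_id": 42, "email": "a@example.com"})
try:
    publish(queue, "user_signup", {"user_id": "42"})
except SchemaError as err:
    print(f"rejected: {err}")
```

The interesting work is in what this sketch leaves out: evolving schemas without breaking existing consumers, and deciding which fields matter to the business. That coupling to application logic is why this remains an internal investment area.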

Batch, streaming and machine learning

Machine learning is getting tremendous press lately, and cloud providers are eager to serve. That’s not to say that the more traditional areas of data processing are standing still: both batch and streaming computation are evolving quickly as well.

Perhaps the common theme is that they are evolving too quickly, with more experiments than standards:

- Literally dozens of things from AWS, dozens more from GCP, and even more from Microsoft Azure.

- Google Dataflow is a powerful and low-maintenance solution for bounded and unbounded event computation.

- AWS Ironman is a complete machine learning ecosystem.

- I think the AWS Deep Learning AMIs are worth calling out as an interesting play and a good example of API standardization, as they support Google’s TensorFlow APIs. Managing your own images, and especially upgrading all of the dozens of underlying libraries as they continue to push out new releases every month or two, is a challenging and toilsome exercise, which makes these AMIs a more interesting and potentially impactful experiment than might be obvious.

- Custom hardware, like Google’s TPUs, is a place where cloud providers are going to invest deeply to compete with each other, and where internal providers simply can’t get the economies to compete.

That said, most of these platforms are still very opinionated, constrained or lack broad adoption. Given how quickly the data ecosystem is evolving and how slow big-data migrations tend to be, I think this space will continue to be wide open for internal investment. (Even things you really want to be fully commoditized, like Hadoop ecosystem management, have continued to require internal attention at scale.)

There are interesting, rapidly evolving external factors here, like the aforementioned GDPR, which are leading to a wave of related solutions like Apache Atlas. When you combine these factors with where the edges of data are going, into avenues like tuning machine learning models, data continues to be ripe for internal investment.

Workflows, tools and users

Pulling it all together, the message I want to emphasize is not that the cloud is eating our profession, but rather that we have a really special opportunity to evolve our focuses and strategies to take advantage of the cloud. By picking the right strategies and investment areas today, we’ll position ourselves to be doing much more interesting work tomorrow, and be much more valuable to our users and our companies along the way.

If we focus on the workflows (alerting, incident management, debugging, deployment, build, provisioning/scaling/deprecating applications, …), the interfaces (containers, protocols, CLIs, GUIs, …) and our users’ needs, the most interesting problems are still to come.

Thank you Bobby, Kate, Lachlan, Neil, Vicki and Uma for your help.