Poking around OpenAI.

I haven’t spent much time playing around with the latest LLMs, and decided to spend some time doing so. I was particularly curious about the use case of using embeddings to supplement user prompts with additional, relevant data (e.g. injecting the current status of a user’s recent tickets into the prompt when they might ask about progress on those tickets). This use case is interesting because it’s very attainable for existing companies and products to take advantage of, and I imagine it’s roughly how e.g. Stripe’s GPT-4 integration with their documentation works.

To play around with that, I created a script that converts all of my writing into embeddings, embeds the user-supplied prompt to identify relevant sections of my content to inject into an expanded prompt, and sends that expanded prompt to OpenAI’s API.

You can see the code on GitHub, and read my notes on this project below.

References

This exploration is inspired by the recent work by Eugene Yan and Simon Willison. I owe particular thanks to Eugene Yan for his suggestions to improve the quality of the responses.

The code I’m sharing below is scraped together from a number of sources:

- OpenAI Cookbook on Question Answering using Embeddings

- OpenAI Cookbook on preparing data for use in embeddings

- OpenAI Cookbook on creating embeddings

I found none of the examples quite worked as documented, but ultimately I was able to get them working with some poking around, relearning Pandas, and so on.

Project

My project was to make the OpenAI API answer questions with awareness of all of my personal writing from this blog, StaffEng and Infrastructure Engineering. Specifically this means creating embeddings from Hugo blog posts in Markdown to use with OpenAI.

You can read the code on GitHub. I’ve done absolutely nothing to make it easy to read, but it is a complete example, and you could use it with your own writing by changing Line 112 to point at your blog’s content directories. (Oh, and changing the prompts on Line 260.)
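For anyone adapting this to their own blog, the preparation step (turning Hugo Markdown posts into chunks that can be embedded) might look roughly like the sketch below. This is not the code from the repository: the function names (`strip_front_matter`, `split_sections`, `load_posts`), the 1,500-character chunk size, and the paragraph-based splitting are all assumptions made for illustration.

```python
import re
from pathlib import Path

# Hugo front matter is a block delimited by --- (YAML) or +++ (TOML)
# at the very top of the file.
FRONT_MATTER = re.compile(r"\A(---|\+\+\+)\s*\n.*?\n\1\s*\n", re.DOTALL)


def strip_front_matter(text: str) -> str:
    """Remove a leading YAML or TOML front matter block, if present."""
    return FRONT_MATTER.sub("", text, count=1)


def split_sections(body: str, max_chars: int = 1500) -> list[str]:
    """Group paragraphs into chunks of roughly max_chars characters.

    The chunk size is an arbitrary illustrative choice, and a single
    paragraph longer than max_chars is kept whole rather than split.
    """
    chunks, current = [], ""
    for paragraph in re.split(r"\n\s*\n", body):
        paragraph = paragraph.strip()
        if not paragraph:
            continue
        if current and len(current) + len(paragraph) + 2 > max_chars:
            chunks.append(current)
            current = paragraph
        else:
            current = f"{current}\n\n{paragraph}" if current else paragraph
    if current:
        chunks.append(current)
    return chunks


def load_posts(content_dir: str) -> list[dict]:
    """Walk a content directory and return one record per chunk."""
    records = []
    for path in sorted(Path(content_dir).rglob("*.md")):
        body = strip_front_matter(path.read_text(encoding="utf-8"))
        for i, chunk in enumerate(split_sections(body)):
            records.append({"source": str(path), "chunk": i, "text": chunk})
    return records
```

Each record’s `text` is what you would send to an embeddings endpoint, keeping `source` around so an answer can point back at the post it came from.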

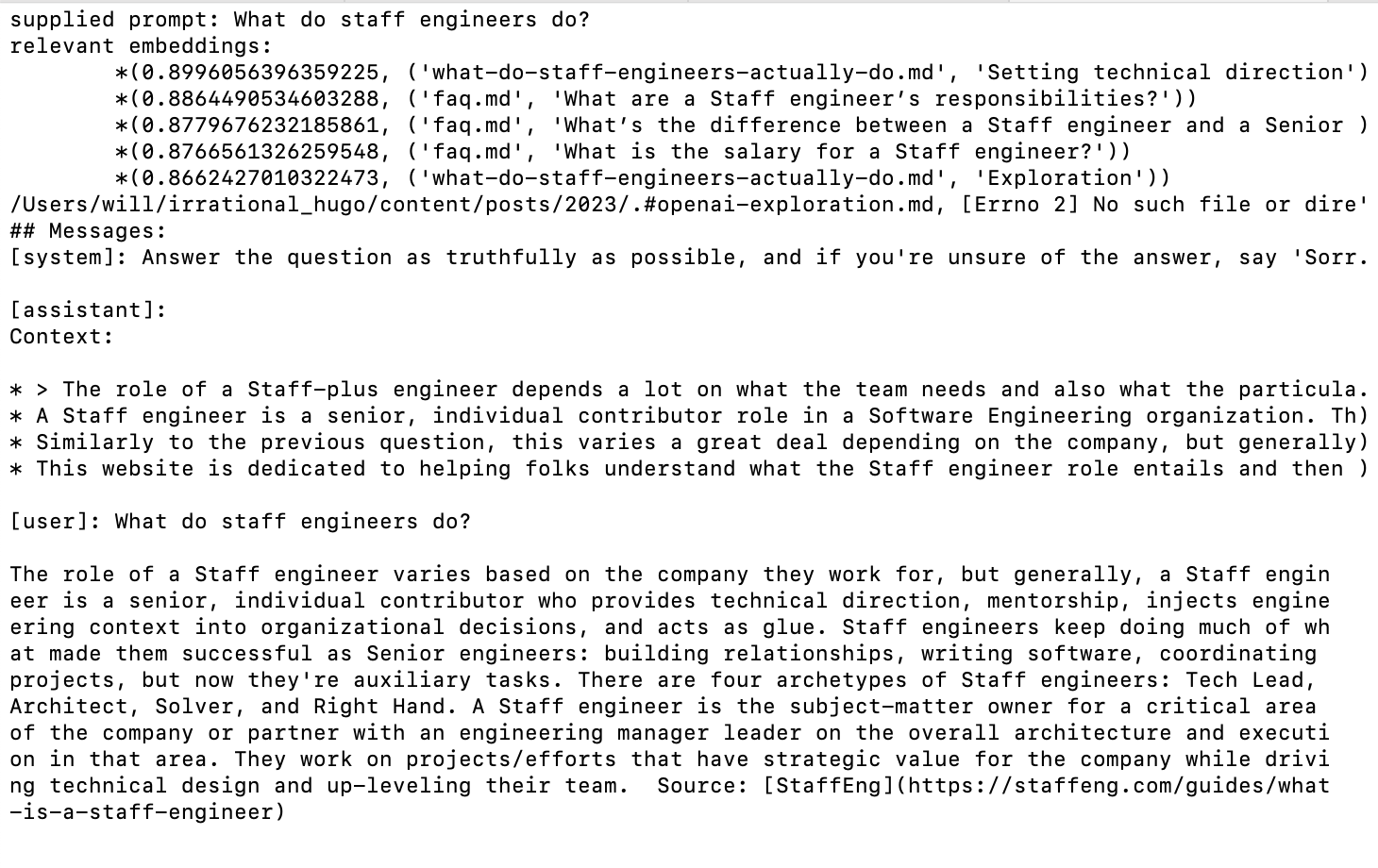

You can see a screenshot of what this looks like below.

This project is pretty neat, in the sense that it works. It did take me a bit longer than expected, probably about three hours to get it working given some interruptions, mostly because the documentation’s examples were all subtly broken or didn’t actually connect together into working code. After it was working, I inevitably spent a few more hours fiddling around as well. My repo is terrible code, but it is a complete working example if anyone else has similar issues getting the question answering using embeddings stuff working!

The other comment on this project is that I don’t really view this as a particularly effective solution to the problem I wanted to solve, as it’s performing a fairly basic nearest-neighbor search to match embedded versions of my blog posts against the embedded query, and then injecting the best matches into the GPT query as context. Going into this, I expected, I dunno, something more sophisticated than this. It’s a very reasonable solution, and a cost-efficient one because it avoids any model (re)training, but it feels a bit more basic than I imagined.
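To make concrete how basic the matching step is, here is a minimal sketch of ranking stored chunks against a query and injecting the best ones into a prompt. It assumes cosine similarity as the ranking measure, which I have not confirmed against the repository. The three-dimensional vectors are toy stand-ins for real embeddings, and the helper names and prompt wording are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_matches(query_vec: list[float], corpus: list[tuple[str, list[float]]], k: int = 3) -> list[str]:
    """Return the k chunk texts whose vectors are most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Assemble an expanded prompt; the wording here is purely illustrative."""
    context = "\n\n".join(context_chunks)
    return (
        "Answer the question using the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Toy data: in a real run these vectors would come from an embeddings API.
corpus = [
    ("Notes on staff engineer archetypes.", [0.9, 0.1, 0.0]),
    ("Thoughts on infrastructure migrations.", [0.1, 0.9, 0.0]),
    ("A recipe for sourdough.", [0.0, 0.1, 0.9]),
]
query_vec = [0.8, 0.2, 0.0]

best = top_matches(query_vec, corpus, k=2)
prompt = build_prompt("What are staff engineer archetypes?", best)
```

Nothing here involves training or fine-tuning: the model only ever sees the handful of chunks that happened to rank highest, which is why the approach is cheap and also why it feels simple.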

Also worth noting, the total cost of developing this app and running it a few dozen times: $0.50.

Thoughts

This was a fun project, in part because it was a detour away from what I’ve spent most of my time on the last few months, which is writing my next book. Writing and editing a book is very valuable work, but it lacks the freeform joy of hacking around a small project with zero users. Without overthinking or overstructuring things too much, here are some bullet-point thoughts about this project and the expansion of AI in the industry at large:

- As someone who’s been working in the industry for a while now, it’s easy to get jaded about new things. My first reaction to the recent AI hype is very similar to my first reaction to the crypto hype: we’ve seen hype before, and initial hype is rarely correlated with long-term impact on the industry or on society. In other words, I wasn’t convinced.

- Conversely, I think part of long-term engineering leadership is remaining open to new things. The industry has radically changed from twenty years ago, with mobile development as the most obvious proof point. Most things won’t change the industry much, but some things will completely transform it, and we owe cautious interest to these potentially transformational projects.

- My personal bet is that the new AI wave is moderately transformative but not massively so. Expanding on my thinking a bit, LLMs are showing significant promise at mediocre solutions to very general problems. A very common, often unstated, Silicon Valley model is to hire engineers, pretend the engineers are solving a problem, hire a huge number of non-engineers to actually solve the problem “until the technology automates it”, grow the business rapidly, and hope automation solves the margins in some later year. LLM adoption should be a valuable tool for improving margins in this kind of business, which in theory should enable new businesses to be created by improving the potential margin. However, we’ve been in a decade of zero-interest-rate policy, which has meant that current-year margins haven’t mattered much to folks. That implies most of the ideas that improved margins would enable should already have been attempted in the preceding margin-agnostic decade. This means that LLMs will make those businesses better, but the businesses themselves should have already been tried, and many of them ultimately failed because market size prevented the required returns, more so than because of the margin cost of operating large internal teams to mask over missing margin-enhancing technology.

- If you ignore the margin-enhancement opportunities represented by LLMs, which I’ve argued shouldn’t generate new business ideas but improve existing business ideas already tried over the last decade, then it’s interesting to ponder what the sweet spot is for these tools. My take is that they’re very good at supporting domain experts, where the potential damage caused by inaccuracies is constrained, e.g. GitHub Copilot is a very plausible way to empower a proficient programmer, and a very risky way to train a novice in a setting where the code has access to sensitive resources or data. However, to the extent that we’re pushing experts from authors to editors, I’m not sure that’s an actual speed improvement for our current generation of experts, who already have mastery in authorship and (often) a lesser skill in editing. Maybe there is a new generation of experts who are exceptional editors first, and authors second, which these tools will foster. If that’s true, then likely the current generation of leaders is unable to assess these tools appropriately, but… I think that most folks make this argument about most new technologies, and it’s only true sometimes. (Again, crypto is a clear example of something that has not overtaken existing technologies in the real world with significant regulatory overhead.)

Anyway, it was a fun project, and I have a much better intuitive sense of what’s possible in this space after spending some time here, which was my goal. I’ll remain very curious to see what comes together here as the timeline progresses.