Measuring an engineering organization

For the past several years, I’ve run a learning circle with engineering executives. The most frequent topic that comes up is career management: what should I do next? The second most frequent topic is measuring engineering teams and organizations: my CEO has asked me to report monthly engineering metrics, so what should I actually include in the report?

Any discussion about measuring engineering organizations quickly unearths strong opinions. Anything but sprint points! Just use SPACE! Track incident frequency! Wait, absolutely do not track incident frequency! Just make a pretty chart, they won’t look at it anyway! All of these answers, even the last one, have a grain of truth to them, but they skip past an important caveat: there are many reasons to measure engineering, many stakeholders asking for metrics, and addressing each requires a different approach.

There is no single solution to engineering measurement; rather, there are many modes of engineering measurement, each appropriate for a given scenario. Becoming an effective engineering executive means adding more approaches to your toolkit and remaining flexible about which to deploy for any given request.

You can use the Engineering Metrics Update template to report on these measures.

This is an unedited chapter from O’Reilly’s The Engineering Executive’s Primer.

Measuring for yourself

When you enter a new executive role, your CEO will probably remark, offhandedly, that you’ll “need to prepare an engineering slide for the next board meeting in three weeks.” That’s a very focusing moment (how do I avoid embarrassing myself in my first board meeting?), but even if your first concrete measurement deliverable is a board slide, I don’t recommend starting your measurement efforts by instrumenting something the board will find useful.

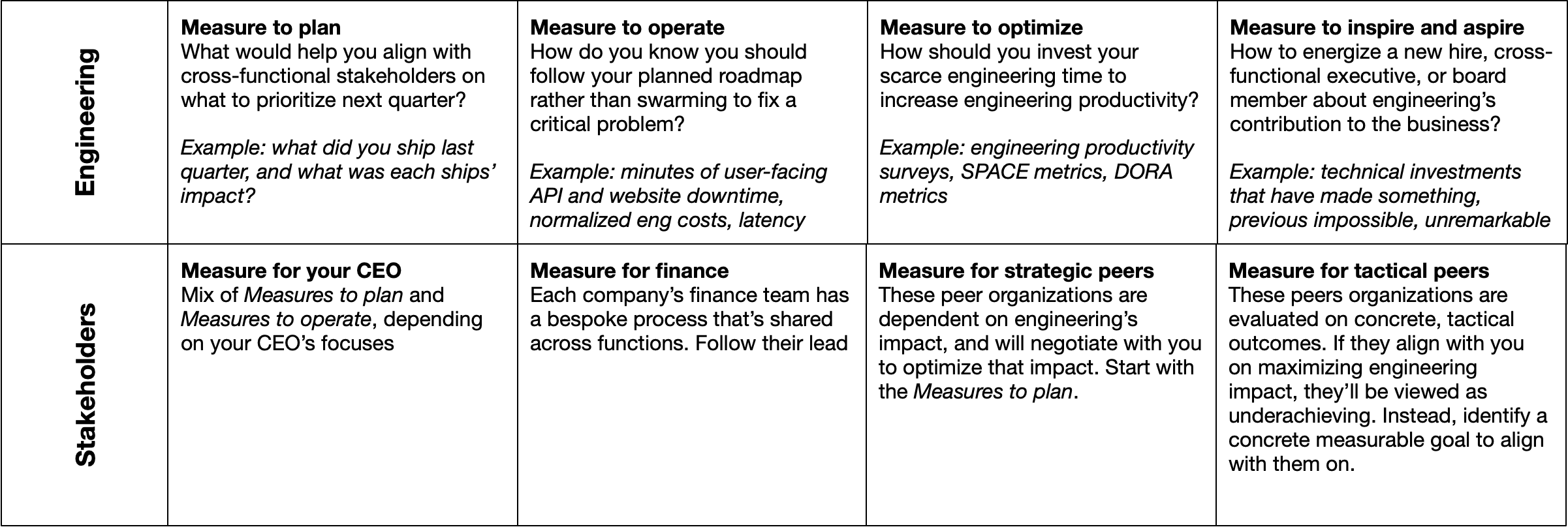

Instead, start by measuring for yourself. What information do you need to operate the engineering organization effectively? As you document your measurement goals, I recommend sorting them into these four distinct buckets:

Measure to plan: what would help you align with cross-functional stakeholders to decide what to ship in a given quarter, half or year? Critically, this is not about how you ship (“are we using agile?”); rather, your goal is to support project selection and prioritization. You want a single place that shows the business, product, and engineering impacts of work. You might argue that planning is the product team’s responsibility, but only engineering can represent the full set of projects that engineering works on (e.g. product is unlikely to roadmap your database migration).

Start by tracking the number of shipped projects by team and the impact of each of those projects.

Measure to operate: what do you need to know to be confident that your software and teams are operating effectively on a day-to-day basis? It can be helpful to think of these as quality measures, because the real question behind them is: how do you know you should be following your planned roadmap rather than swarming to fix a critical problem?

Some good places to start are: tracking the number of incidents (each connected to an incident review writeup); minutes of user-facing API and website downtime; latency of user-facing APIs and websites; engineering costs normalized against a core business metric (for example, the cost to serve your most important API, calculated simply by dividing that month’s engineering spend by the number of API requests served that month); user ratings of your product (e.g. app store ratings); and a broad measure of whether your product’s onboarding loop is completing (e.g. what percentage of users reaching your website successfully convert into users?).
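
To make the cost-to-serve normalization concrete, here is a minimal Python sketch that divides a month’s engineering spend by the API requests served that month, scaled to one million requests. The `MonthlyUsage` record, the `cost_per_million_requests` function, and every number below are hypothetical; in practice the inputs would come from your own billing and traffic data.

```python
from dataclasses import dataclass


@dataclass
class MonthlyUsage:
    """Hypothetical monthly inputs; replace with your own billing and traffic data."""
    month: str
    engineering_spend_usd: float  # spend attributed to serving this API in the month
    api_requests: int             # requests served by that API in the same month


def cost_per_million_requests(usage: MonthlyUsage) -> float:
    """Cost to serve: spend divided by requests, expressed per one million requests."""
    if usage.api_requests <= 0:
        raise ValueError("api_requests must be positive")
    # Multiply before dividing to keep round-number inputs exact in floating point.
    return usage.engineering_spend_usd * 1_000_000 / usage.api_requests


# Illustrative numbers only.
history = [
    MonthlyUsage("2024-01", 120_000.0, 400_000_000),
    MonthlyUsage("2024-02", 126_000.0, 450_000_000),
]

for usage in history:
    print(f"{usage.month}: ${cost_per_million_requests(usage):.2f} per 1M requests")
```

In this made-up example, spend rises 5% from January to February while the cost per million requests falls from $300.00 to $280.00, which is the context a raw spend chart would hide.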

Measure to optimize: what do you need to know to effectively invest time into improving engineering productivity? If you look at engineering as a system, how would you understand that system’s feedback loops? It’s worth noting that this tends to be how engineers themselves measure their experience working within your company.

Start with SPACE or its predecessor, Accelerate. If those are too heavy, start with a developer productivity survey.

Measure to inspire and aspire: how can you concisely communicate engineering’s transformational impact to the business? How would you energize a potential hire, new cross-functional executive, or board member about engineering’s contribution to making the business possible?

Maintain a list of technical investments that turned something impossible (“we can’t make breaking API changes without causing production impact to our entire user base”) into something so obvious that it isn’t really worth mentioning (“we allow each individual API integration to upgrade API versions at their convenience rather than on our timeline”). When you speak at company meetings, anchor on these kinds of generational improvements, and try to include at least one such improvement in every annual planning cycle.

A frequent rejoinder when you discuss measurement is to ask whether you’re measuring enough: shouldn’t we also be measuring database CPU load, pull request cycle time, and so on? The secret of good measurement is actually measuring something. The number one measurement risk is measuring nothing because you’re trying to measure everything. You can always measure more, and measuring more is useful, but do it across many small iterations, and don’t be afraid to measure something quick and easy today.

Measuring for stakeholders

One reason to focus on quick measurements rather than perfect ones is that a lot of folks within your company want you to measure stuff. There’s stuff that you find intrinsically valuable to measure, but there’s also stuff your CEO wants you to measure, stuff Finance is asking you for, stuff to present at monthly status meetings, and so on.

While there are quite a few asks, the good news is that the requests are common across companies and can usually be mapped into one of these four categories:

Measure for your CEO or your Board. Many experienced managers, likely including your CEO, manage through inspection. This means they want you to measure something, set a concrete goal against it, and share updates on your progress against that goal. They usually don’t have the engineering background to manage your technical decisions, so instead they’ll treat your ability to execute against these goals as a proxy for the quality of your engineering leadership. If they dig into your measurements, they’ll focus on engineering’s contribution to the business strategy itself, rather than the micro-optimizations of how engineering works day-to-day.

These measures don’t need to be novel, and your best bet is to reuse the measurements you already maintain for your own purposes. Most frequently, that means reusing your measures for planning or operations.

The biggest mistake to avoid is asking your CEO to measure engineering through your metrics to optimize. Although you may intuitively agree that being more efficient is likely to make engineering more impactful, most CEOs are not engineers and often won’t be able to take that intuitive leap. Further, being highly efficient simply means that engineering could be impactful, not that it actually is impactful!

Measure for finance. Finance teams generally have three major questions for engineering. First, how is actual headcount trending compared to budgeted headcount? Second, how are actual vendor costs trending compared to budgeted vendor costs? Third, which engineering costs can be capitalized instead of expensed, and how do we justify that in the case of an audit?

You will generally have to meet Finance where they are within their budgeting processes, which vary by company but are generally consistent within any given company. The capitalization versus expensing question is more nuanced and deserves detailed discussion of its own; I will say that the default approaches tend to be cumbersome, but there is almost always a reasonable intersection between the priorities of Finance, auditors, and engineering that slightly disappoints each party while still meeting all parties’ requirements.

Although I’ve rarely had Finance ask for it, I find it particularly valuable to align with Finance on engineering’s investment thesis for allocating capacity across business priorities and business units. At some point, business units within your company will start arguing about how to attribute engineering costs across different initiatives (engineering costs are high enough that attributing too much of them to a new initiative will make it show a heavy loss rather than promise), and having a clearly documented allocation will make that conversation much more constructive.
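
One lightweight way to document that allocation is to record the agreed capacity percentages and derive each initiative’s share of engineering cost from them. This Python sketch assumes whole-dollar costs and whole-number percentages; the `allocate_engineering_cost` function, the initiative names, and the figures are all hypothetical.

```python
from __future__ import annotations


def allocate_engineering_cost(total_cost: int, allocation_pct: dict[str, int]) -> dict[str, int]:
    """Split a total engineering cost across initiatives by agreed capacity percentages.

    The percentages must sum to 100 so the documented allocation accounts for
    all of engineering's capacity.
    """
    if sum(allocation_pct.values()) != 100:
        raise ValueError("allocation percentages must sum to 100")
    # Floor each share to whole dollars.
    shares = {name: total_cost * pct // 100 for name, pct in allocation_pct.items()}
    # Flooring can leave a few dollars unassigned; give them to the largest
    # allocation so the shares always add back up to total_cost.
    remainder = total_cost - sum(shares.values())
    largest = max(allocation_pct, key=lambda name: allocation_pct[name])
    shares[largest] += remainder
    return shares


# Hypothetical allocation agreed with Finance and business-unit leads.
allocation = {"core platform": 40, "existing product": 35, "new initiative": 25}
for name, dollars in allocate_engineering_cost(1_000_000, allocation).items():
    print(f"{name}: ${dollars:,}")
```

Deriving the split from documented percentages means a dispute about cost attribution becomes a discussion about the percentages themselves, rather than a fresh negotiation over each line item.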

Measure for strategic peer organizations. Your best peer organizations are proactive partners in maximizing engineering’s business impact. Optimistically, this is because these functions are led by enlightened business visionaries. More practically, it’s because their impact is constrained by engineering’s impact, so by optimizing engineering’s impact, they simultaneously optimize their own. It’s common to find product, design and sales functions that are well-positioned for strategic partnership with engineering.

You can usually use the same metrics you need for planning to align effectively with strategic peer organizations.

Measure for tactical peer organizations. While strategic peer organizations may agree to measure engineering on the impact of its work, more tactical organizations will generally demand to measure engineering based on more concrete outcomes. For example, a Customer Success organization may push engineering to be measured by user ticket acceptance rate and resolution time. Legal may similarly want to measure you on legal ticket resolution time. Tactical organizations are not tactical because they lack the capacity for strategic thinking, but rather because their organization is graded in a purely tactical way. If they align with engineering to maximize engineering’s impact, their organization will be perceived as underachieving.

Tactical organizations are held accountable to specific, concrete numbers, and likewise want to hold engineering accountable to specific, concrete numbers of their own. Find something that you and the peer organization can agree upon, identify a cadence for discussing how effectively that measure represents the real cross-organizational need, and iterate over time.

Measuring for stakeholders is often time consuming, and at times can even feel like a bit of a waste. Done properly, it is still time consuming, but it saves time overall because it consolidates messy, recurring discussions into simpler, more predictable contracts about maintaining specific, measurable outcomes.

Sequencing your approach

The list of things to measure for your own purposes is long enough; once you add the stakeholder asks, the combined list can be exceptionally long. Indeed, it’s a truth universally acknowledged, that an engineering executive must be in want of more engineers to support measuring their existing list of “must have” metrics. You’ll never actually measure everything you want. However, over the course of a year or two, you’ll be able to instrument the most important measures, iterate on them, and build confidence in what they actually mean.

I tried to create a concrete list of exactly what you should measure first to avoid spreading yourself too thin, but it wasn’t that useful. There’s just too much context for a rote list. Instead, I offer three rules for sequencing your measurement asks:

- Some things are difficult to measure; only measure those if you’ll actually incorporate that data into your decision making. If you’re unlikely to change your approach or priorities based on the data, then measure something simple instead, even if it’s just a proxy metric.

- Some things are easy to measure; be willing to measure those to build trust with your stakeholders, even if you don’t find them especially accurate. Most stakeholders will focus more on the intention and effort than on the actual output. Measuring is an affirmation that you’re willing to be accountable for your work, not a testament to the thing being measured.

- Whenever possible, only take on one new measurement task at a time. Measurement is surprisingly challenging. Instrumentation can go awry. Data can be subtly wrong. Bringing on many new measures at once takes a lot of time from you or from the subset of your organization that has a talent for validating new data, and that concurrent load is a challenge for most organizations.

Work through measuring something from each category to establish a baseline across these dimensions. Then, rather than being finished, you’ll likely want to start again, albeit with a bit less priority and focus. Measurement is not a one-time task, but an ongoing iteration. There will always be context specific to your situation that may lead you to prioritize a slightly different measurement approach.

That’s fine! Don’t get caught up in following this plan exactly; take the parts that are most useful.

Anti-patterns

Measurement is rife with anti-patterns. The ways you can mess up measurement are truly innumerable, but there are some that happen so frequently that they’re worth calling out:

Focusing on measurement when the bigger issue is a lack of trust. Sometimes you’ll find yourself in a measurement loop. The CEO asks you to provide engineering metrics, you provide those metrics. Instead of seeming satisfied, the CEO asks for another, different set of metrics. Often the underlying issue here is a lack of trust. While metrics can support building trust, they are rarely enough on their own.

Instead, push the CEO (or whoever remains frustrated despite receiving more metrics) on their frustration until you get to the bottom of the concern masquerading as a need for metrics.

Don’t let perfect be the enemy of good. Many measurement projects never make progress because the best options require data that you don’t have. Instrumenting that data gets onto the roadmap, but doesn’t quite get prioritized. A year later, you’re not measuring anything.

Instead, push forward with anything reasonable, even if it’s flawed.

Using optimization metrics to judge performance. It’s easy to be tempted to use your optimization metrics to judge individual or team performance. For example, if a team is generating far fewer pull requests than other teams their size, it’s easy to judge that team as less productive. That may be true, but it may well not be.

Instead, evaluate teams based on their planning or operational metrics.

Measuring individuals rather than teams. Writing and operating software is a team activity, and while one engineer might be focused on writing code this sprint, another might be focused on being glue to make it possible for the first engineer to focus. Looking at individual data can be useful for diagnostic purposes, but it’s a poor tool for measuring performance.

Instead, focus on measuring organization- and team-level data. If something seems off, consider digging into individual data to diagnose, but not to directly evaluate.

Worrying too much about measurements being misused. Many leaders are concerned that their CEO or Board will misuse data. For example, they might throw a fit that many engineers are only releasing code twice a week: isn’t that a sign that the engineers are lazy? Recognize that these lines of discussion are very frustrating, but also that avoiding them doesn’t fix them!

Instead, take the time to educate stakeholders who are interpreting data in unconstructive ways. Keep an open mind; there is always something to learn, even if a particular interpretation is in error.

Deciding measures alone rather than in community. I do recommend coming to measurement with a clear point of view on what you’ve seen work well, but that should absolutely be coupled with multiple rounds of feedback and iteration. Particularly when you’re new to a company, it’s easy to project your understanding of your last job onto the new one, which erodes trust.

Instead, build trust by incorporating feedback from your team and peers.

If you’ve avoided these, you’re not guaranteed to be on the right path, but it’s fairly likely.

Postscript: Building confidence in data

After you’ve rolled out your new engineering measures, you may find that they aren’t very useful. This may be because different parties interpret them in different ways (“Why is Finance so upset about database vendor costs increasing when our total costs are still under budget?”). It may even be that the measures conflict with teams’ experiences of working with the systems (“Why does the Scala team keep complaining about build latency when average build latency is going down?”).

The reality is that measurement is not inherently valuable; it is only by repeatedly pushing on data that you get real value from it. A few suggestions for pushing on data to extract more value from it:

- Review the data on a weekly cadence. Ensure that review looks at how the data has changed over the last month, quarter or year. Whenever possible, also establish a clear goal to compare against, as both setting and missing goals will help focus on the areas where you have the most to learn.

- Maintain a hypothesis for why the data changes. If the data changes in a way that violates your hypothesis (“cost per request should go down when requests per second go up, but in this case the cost went up as requests per second went up, why is that?”), then dig in until you can fully explain why you were wrong. Use that new insight to refine both your hypothesis and your tracked metrics.

- Avoid spending too much time alone with the data; instead, bring in others with different points of view to push on the data together. This will surface many different hypotheses for how the data reacts, and will allow the group to learn together.

- Segment data to capture distinct experiences. Reliability and latency in Europe will be invisible (and potentially quite bad) if your datacenters and the vast majority of your users are in the United States. Similarly, Scala, Python and Go will have different underlying test and build strategies; just because build times are decreasing on average doesn’t mean Scala engineers are having a good build experience.

- Discuss how the objective measurement does or does not correspond with the subjective experience of whatever the data represents. If builds are getting faster, but don’t feel faster to the engineers triggering them, then dig into that: what are you missing?
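
As a sketch of why segmentation matters, the Python below averages build durations overall and per language. The records, week labels, and the helper names `mean_build_minutes` and `regressed_languages` are invented for illustration; the point is only that an overall average can improve while one segment gets worse.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical build records: (week, language, build duration in minutes).
BUILDS = [
    ("week1", "go", 4.0), ("week1", "go", 4.0),
    ("week1", "python", 6.0), ("week1", "python", 6.0),
    ("week1", "scala", 20.0),
    ("week2", "go", 3.0), ("week2", "go", 3.0), ("week2", "go", 3.0),
    ("week2", "python", 5.0), ("week2", "python", 5.0),
    ("week2", "scala", 24.0),
]


def mean_build_minutes(records, segment):
    """Average build duration per group, where segment(week, lang) returns the group key."""
    groups = defaultdict(list)
    for week, lang, minutes in records:
        groups[segment(week, lang)].append(minutes)
    return {key: mean(values) for key, values in groups.items()}


def regressed_languages(records, before, after):
    """Languages whose average build got slower between two periods."""
    per_lang = mean_build_minutes(records, lambda week, lang: (week, lang))
    languages = sorted({lang for _, lang, _ in records})
    return [
        lang for lang in languages
        if (before, lang) in per_lang and (after, lang) in per_lang
        and per_lang[(after, lang)] > per_lang[(before, lang)]
    ]


overall = mean_build_minutes(BUILDS, lambda week, lang: week)
print(f"overall: {overall['week1']:.2f} -> {overall['week2']:.2f} minutes")
print("slower:", regressed_languages(BUILDS, "week1", "week2"))
```

With this made-up data, the overall average drops from 8.00 to 7.17 minutes while Scala builds slow from 20 to 24 minutes, which is the gap that segmenting, and then comparing against how builds actually feel to engineers, is meant to surface.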

There are, of course, many more ways to push on data, but following these will set you on the right path towards understanding the details and limits of your data. If you don’t spend this sort of time with your data, then it’s easy to be data-driven, highly informed, and still come to the wrong conclusions. Some of the worst executive errors stem from relying too heavily on subtly flawed data, but they’re avoidable with a bit of effort.