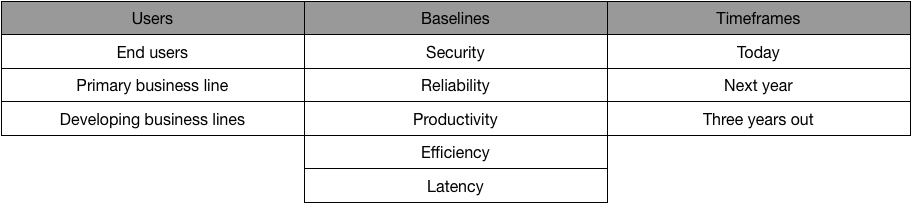

Infrastructure planning: users, baselines and timeframes.

Technical infrastructure is never complete. System processes can always run with less overhead or be bin-packed onto fewer machines. Data can be retrieved more quickly and stored at a cheaper cost per terabyte. System design can broaden the gap between failure and user impact. Transport layers can be more secure.

The sheer variety of investable projects is overwhelming. There are always new technologies to adopt or finish adopting: Docker, Kubernetes, Envoy, GKE, HTTP/2, GraphQL, gRPC, Spark, Flink, Rust, Go, Elixir are just the beginning of your options. Add cloud vendor competition, and the rate of change is pretty staggering.

With such a broad problem domain filled with so many possible solutions, I’ve sometimes found it difficult to provide guidance for infrastructure teams to prioritize their work. Originally, I thought this was because I lacked depth in some facets, but I slowly came to realize it was equally difficult for the teams themselves to prioritize their own work: there were simply too many options.

A couple of years ago I put together an infrastructure planning framework I referred to as the five properties of infrastructure, which worked well at a certain degree of complexity, but recently hasn’t been providing the degree of guidance we needed. As such, over the past couple of months I’ve iterated onto a second framework: users, baselines and timeframes.

Let’s dig into the original and new framesworks!

Five properties of infrastructure

When we put together our first infrastructure planning framework, the issues we wanted to provide clearer guidance around were:

- security and reliability first, then everything else: we wanted every single team to have a consistent culture and approach of prioritizing security and reliability work before all other work,

- prioritizing important but not critical work: there was enough critical work that a successful framework would provide support for shifting from a short-term focus and towards long-term investment,

- resourcing the long tail of opportunities: some organizations ignore cost efficiency and latency concerns until they find themselves sipping coffee in the burning kitchen of meme, and we wanted to make sure we funneled a small but consistent quantity of resources to these areas.

Our solution was a ranked list of infrastructure properties, all of which we’d do some work on, doing the most for those higher in the list:

- Security is more than “locking things down”, it’s streamlining work so that the same tasks can be accomplished more securely with the same level of effort.

- Reliability is uptime, harvest, and yield, hardening your interfaces, and ensuring your oncall load is low enough that alerts are actioned instead of ignored.

- Productivity is making the engineers supported by your team more effective through potent primitives and abstractions more potent (some would argue function-oriented computing is such an improvement over service-oriented computing), or by offering entirely new capabilities.

- Efficiency is providing the same service at a lower cost. This can be reducing maintenance overhead to spend more time on features, and it can also be better picking more appropriate AWS or GCP instances.

- Latency is both reducing time to reach your servers (through points of presence, an active-active multi-region/datacenter topology, or connection pooling with established connections), and also time within your stack (via better load balancing strategies, request tracing to find latency hot spots, or optimizing database queries).

This wasn’t a perfect framework, but it was quite useful during planning sessions. In particular, it was good at balancing focus across internal and external users, and avoiding a common infrastructure pitfall of self-dealing during prioritization.

However, there were some important questions that this framework didn’t help answer:

- Security is ranked above reliability, but it’s clear that some reliability work is more important than some security work. How do we make these trade offs?

- Within a given category, there is an infinite amount of possible work. How do we narrow our focus to remain aligned with our users’ needs? Also, which users needs?

- How will work to improve lower ranked priorioties, such as latency, ever get prioritized?

- How should we prioritize between these two users’ security asks?

So we went back to the drawing board to build on what worked.

An aside on creativity

Before jumping into the next framework, a quick comment on creativity. Some of the most valuable work we do as leaders is to avoid accepting trade offs as inevitable, and instead treat them as accidental.

Workarounds abound.

It’s often possible, with subject matter expertise and creativity, to “have both.” I think of this as getting paid twice, and this is one of the important arguments for why you should avoid teams becoming exhaustively busy: tired folks are not creative.

Users, baselines and timeframes

As we sat down to iterate on our infrastructure prioritization framework, the things we particularly wanted to improve were:

- Most planning periods should prioritize a healthy mix of security, reliability, productivity, efficiency and latency work.

- Project mix should be reactive to our problems—if developer productivity decreased significantly, it should prioritize more investment there—and to our users’ needs.

- Avoid overfitting on our current capabilities, especially SLAs. The platform we aspire offer five years from now should be an order of magnitude improvement on what we offer today.

- Protecting our ability to invest into the sorts of work we’ll need to undertake to accomplish those order of magnitude improvements.

- Provides structure for explaining our tradeoffs to our users.

We spent some time reflecting on what did and didn’t work well in the previous iteration, as well as those goals for improvement. Emerging from that reflection, the system we developed is:

- Baselines are set for every infrastructure property: security, reliability, productivity, efficiency and latency. These are in effect the service level objectives we commit to providing. (More on baselines.)

- Timeframes are the different eras across which we ensure we’ll maintain our baselines. What work do we need to maintain our baselines this year? What about next year? Five years out?

- Users are the folks, internal and external, who we enable and support. Can we group our users into cohorts with similar needs? Can we provide a breakdown of how much time we want to invest into which cohort for the next year? Two years?

Now we start the planning cycle with a list of user asks, and then merge in projects needed to maintain our baselines across appropriate timeframes. When users ask to understand why we’re doing infrastructure work instead of an alternative project, we can explicitly connect our investments to business value.

While this isn’t a perfect tool, I’ve found it to be a powerful and effective way to explain priorities and tradeoffs.

One of the most interesting early questions that came up during developing this guidelines was whether infrastructure teams should list themselves as each other customers.

For the overall organization, I believe the answer here is generally no. The measure of an infrastructure organization’s impact is the discounted future product development velocity it will enable, and something this model emphasizes is that all self-investment is done on behalf of increased future product velocity.

Conversely, at the team level within an infrastructure organization, I think it’s very important to recognize the other infrastructure teams as your users. This is particularly true because good infrastructure offerings are composable, building upon other infrastructure offerings to allow folks to pick appropriate productivity:property tradeoffs for their projects.

Resourcing

When folks read frameworks such as these, the—quite appropriate—first question is generally, “Do you actually do this?” In this case, we’re winding down our latest planning process for the team I work with, and what I’ve written here is a fairly accurate, somewhat abstracted, and slightly idealized version of what we did.

The one omitted bit is that we also layer on a simple resourcing framework to ensure we’re investing into sustainable operable systems and support investing in the kinds of long-term projects that will shift our baselines in ways that tactical work doesn’t.

The current iteration of that resourcing framework is:

- User asks (40%) - our largest chunk of work goes directly towards users asks (as prioritized by users, baselines and timeframes). Our biggest bucket of work is immediately improving our users lives.

- Platform quality (30%) - maintaining our current and future baselines. This is also our budget to reduce toil in operating our systems. This isn’t the only time we prioritize baseline work, sometimes those baselines are user asks as well, this is instead the minimum amount of time we spend shoring up the baselines.

- Key initiatives (30%) - each half we select one to three very large projects at the engineering “group” level (in this case, a bit over one hundred engineers) that solve an entire category of user asks or provide a generational leap in hitting our baselines. We scope the projects to what we can finish in six months; then we finish that work in six months. This protects our ability to innovate, while avoiding never-ending projects.

The framework is super simple, but I’ve found it works well. In particular, it ensures that the right questions get asked during the resourcing process, which is the ultimate goal of resourcing and planning discussions.

I’d be very curious to hear from y’all how your teams do infrastructure planning. What has worked well for you? What hasn’t? What are your biggest challenges?