Designing interview loops.

Anyone who has flipped through Cracking the Coding Interview knows that evaluating folks for a new role is a coarse science. Most interviewers are skeptical of the accuracy of their interviews, and it’s hardly the rare interview retrospective where folks aren’t sure they got enough signal on a candidate to hire with confidence.

Although a dose of caution about interview accuracy is well-founded, I’m increasingly convinced that a bit of structure and creativity leads to interview loops that give clear signal on whether a candidate will succeed in the role, and can do so in a fair and consistent fashion.

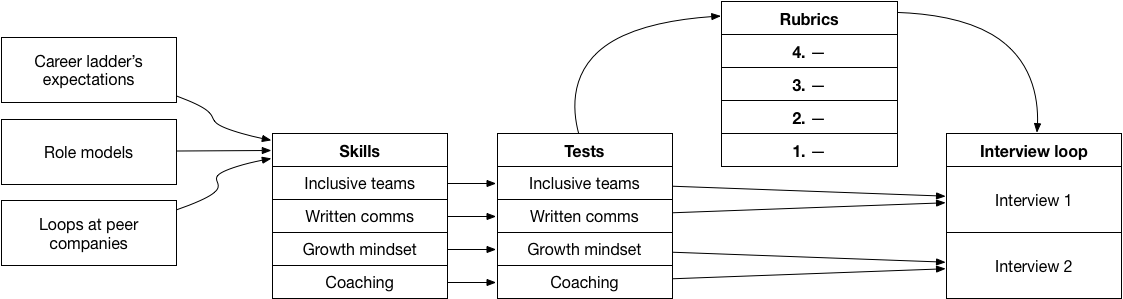

The approach I’ve started using to design interview loops is:

- Metrics first. Do not start designing a new interview loop until you’ve instrumented your hiring funnel. It’s pretty hard to evaluate your loop or improve it without this data, so don’t undertake an improvement project without it.

- Understand current loop’s performance. Take time to identify what you think does and doesn’t work well in your current process. Three sources that are useful are:

    - Funnel performance. Where do folks drop off in your funnel? How does your funnel benchmark against your peers at similar companies?

    - Employee trajectory. For all the folks you have hired, understand how their work performance relates to their interview performance. What elements correlate heavily with success, and which appear to filter on things that end up not contributing to performance in the job?

    - Candidate debriefs. Try to schedule calls with everyone who goes through your process, especially with folks who drop out.

- Learn from peers. Chat with folks who have interviewed at other companies for the kind of role you’re hoping to evaluate. You’re not looking to copy elements verbatim, but rather to get a survey of existing ideas.

- Find role models. Write up a list of ideal candidates for the role and write down what makes them ideal. Be deliberate about ensuring your list of role models includes women and underrepresented minorities, which helps you avoid matching on traits that merely correlate within a less diverse set of role models.

- Identify skills. From your role models and your career ladder, identify a list of skills that are essential to the candidate’s success in the role they’ll be interviewing for. Take a bit of time to rank those skills from most to least important.

- Test for each skill. For each skill, design a test to evaluate the candidate’s strength. Whenever possible, prefer tests that have a candidate show their strength, avoiding formats where they tell you about it. For example, we had an interview where folks described their experience building a healthy team, and replaced it with an interview where folks are given results from an organization health survey and asked to identify issues and propose how they’d address them.

- Avoid testing for polish. Many interviews accidentally test for the candidate’s polish as opposed to any particular skill. This is particularly true for experiential interviews where folks are asked to describe their work, and less common in interviews where they demonstrate skills. That isn’t to say that you shouldn’t deliberately test for polish (it’s quite useful), just that you shouldn’t do it inadvertently.

- For each test, a rubric. Once you’ve identified your tests, write a rubric to assess performance on each test. Good rubrics include explicit scores and criteria for reaching each score. If you find it difficult to identify a useful rubric for a test, then you should look for a different test that is easier to assess.

- Avoid boolean rubrics. Some tests tend to return boolean results; whether someone has experience managing someone out is a common example of a boolean filter. These are inefficient tests because you pretty quickly get a sense of whether someone has or hasn’t ticked this box, and the rest of the interview doesn’t lend more signal. Likewise, you can almost always answer boolean questions from their resume or in a pre-interview screen.

- Group tests into interviews. Once you’ve identified the tests, group them into sets that can be performed together in a single forty-five minute interview, or whatever duration you prefer. The more cohesive the format and subjects of the tests in a given interview, the more natural it’ll feel for the candidate.

- Run the loop. At this point you have an entire loop ready, and it’s time to start using it. Especially early on, you should be asking candidates what did or didn’t work well, but really you should never stop doing this! Each debrief will uncover some opportunities to improve your rubric or tests.

- Review hiring funnel. After you’ve run the new loop ten to twenty times, review the funnel metrics to see how it’s working out. Are some of the interviews passing too frequently? Are others too hard? Review the results in batch.

- Schedule an annual refresh. As the initial rate of iteration slows down, schedule a review for a year out, and at that point you get to ask yourself if the loop has proven effective for your needs, or if you should restart this process!
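As a rough illustration of the "metrics first" and "employee trajectory" steps above, here is a minimal Python sketch. Everything in it is an assumption made for the example: the stage names, the counts, the interview names, and the score scales are invented, and a real funnel would come from your applicant tracking system. It computes stage-to-stage conversion and a simple correlation between each interview's score and later job performance.

```python
from statistics import mean

# Hypothetical funnel: number of candidates who reached each stage, in order.
FUNNEL = [
    ("applied", 400),
    ("recruiter_screen", 120),
    ("phone_screen", 60),
    ("onsite", 24),
    ("offer", 8),
    ("accepted", 6),
]

# Hypothetical hires: rubric score per interview (1-4) and a later
# performance rating (1-5). Interview names are made up for the example.
HIRES = [
    {"architecture": 4, "coding": 3, "org_health": 4, "performance": 5},
    {"architecture": 3, "coding": 4, "org_health": 2, "performance": 3},
    {"architecture": 2, "coding": 4, "org_health": 3, "performance": 3},
    {"architecture": 4, "coding": 2, "org_health": 4, "performance": 4},
    {"architecture": 3, "coding": 3, "org_health": 1, "performance": 2},
]


def stage_conversion(funnel):
    """Return (from_stage, to_stage, rate) for each adjacent pair of stages."""
    rates = []
    for (prev_name, prev_count), (name, count) in zip(funnel, funnel[1:]):
        rate = count / prev_count if prev_count else 0.0
        rates.append((prev_name, name, rate))
    return rates


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 when either series has no variance."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / (var_x * var_y) ** 0.5


def signal_by_interview(hires, outcome="performance"):
    """Correlate each interview's score with the outcome column."""
    interviews = [key for key in hires[0] if key != outcome]
    outcomes = [hire[outcome] for hire in hires]
    return {
        name: pearson([hire[name] for hire in hires], outcomes)
        for name in interviews
    }


if __name__ == "__main__":
    for prev_name, name, rate in stage_conversion(FUNNEL):
        print(f"{prev_name} -> {name}: {rate:.0%}")
    for name, corr in signal_by_interview(HIRES).items():
        print(f"{name}: correlation with performance = {corr:+.2f}")
```

With only a handful of hires, these correlations are noisy and only cover people who passed the loop, so treat them as prompts for discussion rather than proof that an interview does or doesn't work.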

At this point, you have a complete interview loop and the systems to guide the loop towards improvement. Beyond this approach, a few more general pieces of guidance:

- Try to avoid design by committee, which almost always leads to incremental change. Prefer a working group of one or two people, whose proposal is then tested against a bunch of folks for feedback!

- Don’t hire for potential. Hiring for potential is a major vector for bias, and you should try to avoid it. If you do decide to include potential, then spend time developing an objective rubric for potential and ensure the signals it indexes on are consistently discoverable.

- Use your career ladder. Writing a great interview loop is almost identical to writing a great career ladder. If you’ve already written expectations for the role, reuse those as much as possible.

- Iterate on the interview a little. When you first create an interview, you should spend time iterating on the interview format, but the rate of change should drop to near-zero after you’ve given it approximately ten times.

- Iterate on the rubric a lot. As folks attempt to apply the interview rubrics, they’ll continue to find edge cases and ambiguities. Continuing to incorporate those into the interview rubrics is an essential way to reduce bias creeping in.

- A/B testing loops. There is a platonic ideal of testing new interviews using the standard mechanisms we use to test other changes, such as A/B testing. At a certain scale, I think that is almost certainly the best approach, but so far I’ve not been part of a company with enough volume to usefully conduct such tests. In particular, these tests are quite expensive, because you need to control for interviewers and have those interviewers trained on both sets of interviews, and it ends up taking a long time to reach confidence that a new loop is better.

- Hiring committees. As an alternative to A/B testing, I have found a centralized hiring committee that reviews every candidate’s interview experience to be quite valuable for identifying trends across new loops, and more generally to guide the overall process towards consistency and fairness.
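To get a feel for why A/B testing loops needs so much volume, here is a back-of-the-envelope Python sketch. It uses the standard normal-approximation sample size formula for comparing two proportions; the function name, the pass rates, and the significance and power defaults are all assumptions chosen for illustration, not numbers from any real hiring process.

```python
from math import ceil
from statistics import NormalDist


def candidates_per_arm(rate_a, rate_b, alpha=0.05, power=0.8):
    """Approximate candidates needed in EACH loop to detect rate_a vs rate_b.

    Uses the normal approximation for a two-sided test of two proportions.
    It ignores interviewer effects, which would push the real number higher.
    """
    if rate_a == rate_b:
        raise ValueError("rates must differ to have something to detect")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = rate_a * (1 - rate_a) + rate_b * (1 - rate_b)
    return ceil((z_alpha + z_beta) ** 2 * variance / (rate_a - rate_b) ** 2)


if __name__ == "__main__":
    # Hypothetical: old loop yields a successful hire for 20% of candidates,
    # and you hope the new loop raises that to 25%.
    print(candidates_per_arm(0.20, 0.25))
```

Under these made-up rates the answer is on the order of a thousand candidates per loop, which is far more than most teams interview for a single role in a year.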

Wrapping all my learnings on designing interview loops together into something pithy: avoid reusing stuff that you know doesn’t work, and instead approach it from first principles with creativity. Then keep iterating based on how it works for candidates!