Rewriting the Yahoo! BOSS Datahub.

In 2008, my approach to blogging was concocting an explosion of content on breaking technologies and hoping that would translate into notoriety, page views, or at least a job beyond teaching English. I attempted this approach to various degrees with PyObjC, Processing.js, Why the Lucky Stiff’s Shoes, and a few others.

This worked fairly well–density, novelty and recency are the nectar that tempt the search relevancy gods–and eventually nudged open the door to my first job in technology, working at Yahoo! on Yahoo! Build your Own Search Service (BOSS).

The week that BOSS was announced, I wrote a bunch of blog posts about it: 1. Yahoo! Build Your Own Search Service in Django, 2. Search Recipes for Yahoo! Boss In Python, 3. Polishing Up Our Django & BOSS Search Service, 4. Stripping Reddit from HackerNews With BOSS Mashup. Enough posts that they were confused by the volume and reached out to discuss.

After six months of discussions, dodging layoffs and outlasting headcount freezes, they brought me on as an hourly contractor working remotely from the east coast. I was so green, and was ready to work on or do anything now that I had health insurance again.

The first version of BOSS allowed you to query Yahoo’s search results via an API and to remix the results. That version was already running when I joined the team, and we had just started work on the second version, which would allow you to submit your own data and then query against both your own data and Yahoo’s results, merging them together. In some regards, it was a lot like what Swiftype would become.

The product didn’t matter too much to me; I was just glad to be working in technology. No one is willing to write the YUI, Flash, APC integration to show file upload progress? Let me! No one is willing to work on the frontend because it’s paradoxically both (a) beneath them and (b) exasperatingly difficult, given the need to resolve Mozilla 1.5 and IE6 cross-browser compatibility? Let me! No one is willing to learn to debug the Erlang workflow manager? Let me!

The team was the sort of confluence that can only happen at a shrinking company whose processes were designed to excel during high growth. Three members of the team had rolled off supporting del.icio.us when Yahoo! moved it into maintenance mode, and the other ten were engaging in the multi-year pursuit of internal customers for a custom extension to Vespa.

Vespa you can think of as ElasticSearch, although at the time the closest comparison was Solr. And the custom extension, called Hosted Vespa, was a shim that ran on top of Vespa and provided multi-tenancy on what had historically been a single-tenant system.

The technology behind Hosted Vespa was perhaps more literal than one might expect from a piece of technology a team had been trying to champion for years. You’d create a single collection that had a number of fields along the lines of:

int1, int2, ... intN

float1, float2, ... floatN

str1, str2, ... strN

and then we’d create a mapping from each user’s fields to the underlying schema, along the lines of:

age => int1

name => str1

city => str2

At query and index time, the user fields would be translated into the collection’s fields, leaving the underlying Vespa instance blissfully unaware it was supporting multiple tenants. This would have been challenging if adoption had taken off, but excessive adoption wasn’t destined to become our core challenge.
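To make the translation concrete, here is a minimal Python sketch of the idea. It is not the Hosted Vespa code: the `TENANT_MAPPINGS` table, the function names, and the extra `tenant` key used to keep tenants’ documents apart are all assumptions for illustration.

```python
from typing import Any, Dict

# Hypothetical per-tenant mappings from user field names to shared slots.
TENANT_MAPPINGS: Dict[str, Dict[str, str]] = {
    "tenant_a": {"age": "int1", "name": "str1", "city": "str2"},
    "tenant_b": {"price": "float1", "title": "str1"},
}


def to_slots(tenant: str, doc: Dict[str, Any]) -> Dict[str, Any]:
    """Index time: rename a tenant's fields to the shared collection's slots."""
    mapping = TENANT_MAPPINGS[tenant]
    slots = {mapping[field]: value for field, value in doc.items()}
    # Assumption: some tenant identifier is stored alongside the slots so
    # that two tenants sharing "str1" never see each other's documents.
    slots["tenant"] = tenant
    return slots


def translate_query(tenant: str, filters: Dict[str, Any]) -> Dict[str, Any]:
    """Query time: apply the same renaming to the fields in a query."""
    mapping = TENANT_MAPPINGS[tenant]
    translated = {mapping[field]: value for field, value in filters.items()}
    translated["tenant"] = tenant
    return translated


print(to_slots("tenant_a", {"age": 30, "name": "Ada", "city": "Oslo"}))
print(translate_query("tenant_b", {"title": "widget"}))
```

Note that `str1` means `name` for one tenant and `title` for another, which is exactly why the underlying instance never needs to know about tenants.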

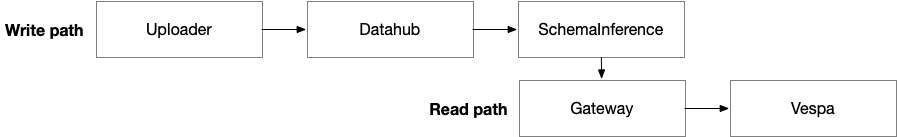

New users would upload an XML file in any format they wanted and run it through our Datahub, which normalized the format and sent the normalized data to SchemaInference. SchemaInference performed some regular expression matches against each field’s values to identify the field’s type and map it to an underlying Vespa field.
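I no longer have the SchemaInference code, so the following is only a rough Python sketch of regex-based type inference as described above. The patterns, the function names, and the rule of assigning slots in order of first appearance are all assumptions.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List

# Assumed patterns; the real ones are not recorded here.
INT_RE = re.compile(r"[+-]?\d+")
FLOAT_RE = re.compile(r"[+-]?(\d+\.\d*|\.\d+|\d+)([eE][+-]?\d+)?")


def infer_type(values: Iterable[str]) -> str:
    """Return "int", "float", or "str" based on every non-empty value."""
    cleaned = [v.strip() for v in values if v.strip()]
    if cleaned and all(INT_RE.fullmatch(v) for v in cleaned):
        return "int"
    if cleaned and all(FLOAT_RE.fullmatch(v) for v in cleaned):
        return "float"
    return "str"


def infer_mapping(records: List[Dict[str, str]]) -> Dict[str, str]:
    """Map each user field to the next free slot of its inferred type."""
    samples: Dict[str, List[str]] = defaultdict(list)
    for record in records:
        for field, value in record.items():
            samples[field].append(value)

    next_slot: Dict[str, int] = defaultdict(int)
    mapping: Dict[str, str] = {}
    for field, values in samples.items():
        kind = infer_type(values)
        next_slot[kind] += 1
        mapping[field] = f"{kind}{next_slot[kind]}"
    return mapping


records = [
    {"age": "31", "name": "Ada", "city": "Oslo", "score": "4.5"},
    {"age": "27", "name": "Bo", "city": "Rome", "score": "3"},
]
print(infer_mapping(records))
```

A field only counts as numeric if every sampled value matches, so one stray string value demotes the whole field to `str`.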

As we brought on beta users, we started receiving larger and larger files. These files began to trigger out of memory errors in the Datahub. An 800 MB file would take 8 GB of memory, and we were running on the largest physical machines we had access to, which I believe had about 12 GB of memory. We were getting files larger than 800 MB, and we couldn’t get larger machines due to the dreaded hardware review process run by David Filo, one of Yahoo’s cofounders, that considered our servers too underutilized to merit more capacity.

This was a problem.

Two issues created this memory expansion. First, the implementation created several accidental copies of the loaded data, which is fairly easy to do when a team experienced with mutating state begins working in a functional programming language like Erlang. Second, it relied upon a DOM parser rather than a SAX parser for parsing and writing XML files.
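The difference between the two parsing styles is easy to see in a small example. The Datahub itself was written in Erlang; the sketch below uses Python’s standard library as a stand-in, with `xml.etree.ElementTree` playing the role of the whole-document tree parser and `xml.sax` the streaming one. The `record` tag and the function names are hypothetical.

```python
import io
import xml.etree.ElementTree as ET
import xml.sax


def count_records_tree(source, tag="record"):
    """Whole-document style: the full tree is built before we look at it,
    so memory grows with the size of the file."""
    tree = ET.parse(source)
    return sum(1 for _ in tree.getroot().iter(tag))


class RecordCounter(xml.sax.ContentHandler):
    """Streaming style: react to each element as it goes by, keep nothing."""

    def __init__(self, tag):
        super().__init__()
        self.tag = tag
        self.count = 0

    def startElement(self, name, attrs):
        if name == self.tag:
            self.count += 1


def count_records_sax(source, tag="record"):
    """Memory use depends on the handler's state, not on the file size."""
    handler = RecordCounter(tag)
    xml.sax.parse(source, handler)
    return handler.count


sample = (
    b"<records>"
    b"<record><name>Ada</name></record>"
    b"<record><name>Bo</name></record>"
    b"</records>"
)
print(count_records_tree(io.BytesIO(sample)))
print(count_records_sax(io.BytesIO(sample)))
```

Both functions return the same answer; the only difference is how much of the document is held in memory while computing it.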

Agile was the fad du jour, but in practice our team had a very strong culture of individual code ownership, and most components had a single owner who was the only person who would modify or operate that piece of code. This meant that the long pole in fixing problems was often swaying the code owner, and only rarely was implementing a solution the bottleneck. This was particularly true for me, an engineer with one year of experience working on a team of folks who’d been writing software for six to twenty years.

I went to work convincing the Datahub’s owner that a SAX parser would use less memory than the DOM parser, but somewhat to my surprise I couldn’t sway them in the slightest. Rather my attempts to sway were a slight. We discussed the theoretical aspect of why incrementally parsing a subset of data would use less memory than loading the entire file. We reviewed benchmarks comparing the approaches and showing SAX used less memory. I created a sample implementation comparing the approaches and showing reduced memory utilization.

None of it resonated. My teammate held firm that a DOM parser used less memory, identifying a potential kernel bug that might be causing the memory bloat.

Wanting to solve the OOM problem–and perhaps the slightest bit caught up in proving my point after several weeks of disagreement–I attempted replacing the implementation’s DOM parser with a SAX parser, but the component had no tests, making it difficult to know if the replacement worked. Moreover, replacing the parser would only address half of the memory bloat; there were still the accidental memory copies to consider.

As the trope goes, one weekend I went to work. I started by writing a comprehensive test suite, then I wrote a new implementation from scratch. It wasn’t a very complex system, maybe two thousand lines of code tops, but to the best of my memory the rewrite was around nine hundred lines of code including the tests. The rewrite passed all the tests, it exposed an identical interface so it could be plugged into the existing workflow manager, it was faster to process files, and most importantly benchmarks showed it used a constant amount of memory independent of file size, taking roughly a couple hundred MB per concurrent file. It was simple, it was fast, it worked, and it was a drop-in replacement.

Up it went into a svn branch, and I shared the results with the team.

If you’re anticipating this culminating in the hero narrative, this is not the story for you. It was a different era of engineering management, and over two years I can only remember three one-on-ones with my manager, and you, dear reader, will be unsurprised to know that the second of those one-on-ones came unplanned the following morning when my manager grabbed me from the comfort of my five-foot cubicle walls.

It was a short discussion. “If you were more experienced, I would fire you right now. Delete the rewrite, delete the tests, and never do something like this again.” So I deleted the rewrite. I deleted the tests. Soon thereafter the Microsoft-Yahoo! search deal paused our v2 launch, and a year later the team was wound down.

The Datahub never processed files larger than 800 MB, nor did it ever get tests, but I got the message. Six months later I left for bigger and better things at Digg.

It’s taken me some years to decide what I wanted to take away from my time at Yahoo!, and from this particular parable of the Datahub. I look back on my behavior with regret: I can’t imagine a maneuver more detrimental to a team’s psychological safety than rewriting someone else’s work. I can also recognize it as what it was: a misguided attempt to unstick a struggling team when I had no experience, little awareness, and far fewer tools than I have today.

What has stuck with me most is an echo of what a member of my church advised me when I applied to college: “Don’t focus on the quality of the teachers, they’ll be excellent everywhere, focus on the quality of your peers.” I suspect there is more variance in the quality and qualities of company leadership than undergraduate education, but it’s been irrefutably true that what I’ve learned and accomplished has directly corresponded to the teams I’ve gotten to work with.