An Introduction to Compassionate Screen Scraping

Screen scraping is the art of programmatically extracting data from websites. If you think it's useful: it is. If you think it's difficult: it isn't. And if you think it's easy to really piss off administrators with ill-considered scripts, you're damn right. This is a tutorial on not just screen scraping, but socially responsible screen scraping. It's an amalgam of getting the data you want and the Golden Rule, and reading it is going to make the web a better place.

We're going to be doing this tutorial in Python, and will use the httplib2 and BeautifulSoup libraries to make things as easy as possible. We'll look at setting things up in a moment, but first a few words about being kind.

Websites crash. They look pretty and reliable and stable, but then a butterfly flaps its wings somewhere and they ride a handbasket straight to hell. For small websites 'hell' is a complete crash, while for bigger websites it's more often sluggish performance. Either way, the butterfly flapping its wings is always an unexpected influx of traffic, and often that traffic spike is the direct result of inconsiderate screen scrapers.

For my blog, the error reports I get are all generated by overzealous web crawlers from search engines (perhaps the most ubiquitous species of screen scraper). Early on, I would routinely be assaulted by a small research web crawler whose owner was nigh impossible to contact. Not infrequently it would crash things by initiating more HTTP requests than my small VPS could handle. The programmers who wrote the scraper weren't being considerate, and it was causing problems.

This brings us to my single rule for socially responsible screen scraping: screen scraper traffic should be indistinguishable from human traffic. By following this rule we avoid placing undue stress on the websites we are scraping, and as an added bonus we'll avoid any attempts to throttle or prevent screen scraping.¹ We'll use three guidelines to help our scripts feign humanity:

Cache fervently. Especially when you're scraping a relatively small amount of data (and your computer has plenty of memory to store it in), you should save and reuse results as much as possible.

Stagger HTTP requests. Making all the HTTP requests in parallel would speed things up for you, but it won't be appreciated. Even making the requests sequentially can add up to a fairly large hit. Instead, space your requests out by a small period of time; several seconds between requests should keep you well clear of causing problems.

Take only what you need. When screen scraping it's tempting to just grab everything, downloading more and more pages just because they are there. But it's a waste of their bandwidth and your time, so make a list of the data you want to extract and stick to it.

Now, armed with those three guidelines, let's get started screen scraping.

Setting Up the Libraries

First we need to install the httplib2 and BeautifulSoup libraries. The easiest way to do this is to use easy_install.

```
sudo easy_install BeautifulSoup
sudo easy_install httplib2
```

If you don't have easy_install installed, you'll need to download both libraries from the httplib2 and BeautifulSoup project pages.

Choosing a Scraping Target

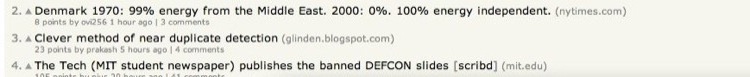

Now lets get scraping. We're going to start small and grow a simple and considerate library for scraping HackerNews. The first step is to consider what data we want to extract. The web interface to HackerNews looks like this:

Thus, from loading only the front page we can extract a lot of data about each story: the title, the link, the social score, the poster, when it was posted, and the number of comments. There are 30 stories displayed on each page, so we'll be able to gather a fair bit of data by retrieving only one page.

The Simplest Case

With httplib2 and BeautifulSoup it's quick and easy to extract the data we need from HackerNews; it's simple enough to just throw a few commands into the interpreter:

```python
>>> import httplib2
>>> from BeautifulSoup import BeautifulSoup
>>> conn = httplib2.Http(".cache")
>>> page = conn.request(u"http://news.ycombinator.com/","GET")
>>> page[0]
{'status': '200', 'content-location': u'http://news.ycombinator.com/', 'transfer-encoding': 'chunked', 'keep-alive': 'timeout=3', 'connection': 'Keep-Alive', 'content-type': 'text/html; charset=utf-8'}
>>> # page[1] contains the retrieved html.
...
>>> soup = BeautifulSoup(page[1])
>>> titles = soup.findAll('td','title')
>>> titles = [x for x in titles if x.findChildren()][:-1]
>>> subtexts = soup.findAll('td','subtext')
>>> stories = zip(titles,subtexts)
>>> len(stories)
30
```

The line that builds titles deserves some explanation. The title CSS class is used both for the td tags that contain the item's rank (for example 1. or 15.) and for the td tags that contain the a tag with the story's title and link. We only want the latter kind, so we filter out the td tags that have no child tags. We also exclude the last result from that filtered list, because it holds the More link to the next 30 results rather than a story.
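To make the filtering rule concrete without BeautifulSoup, here is a small Python 3 sketch using the standard library's html.parser. The SAMPLE markup is a hypothetical, trimmed-down page that only imitates the structure described above (a rank cell and a title cell per story, both with class="title", plus a final More cell), and the TitleCells class is likewise made up for illustration.

```python
from html.parser import HTMLParser

# Hypothetical markup shaped like the page described above: each story has a
# rank cell and a title cell (both class="title"); the last title cell is "More".
SAMPLE = """
<table>
<tr><td align="right" valign="top" class="title">1.</td>
<td class="title"><a href="http://example.com/a">Story A</a></td></tr>
<tr><td class="subtext"><span>38 points</span></td></tr>
<tr><td align="right" valign="top" class="title">2.</td>
<td class="title"><a href="http://example.com/b">Story B</a></td></tr>
<tr><td class="subtext"><span>12 points</span></td></tr>
<tr><td class="title"><a href="/x?fnid=abc">More</a></td></tr>
</table>
"""


class TitleCells(HTMLParser):
    """Records, for every <td class="title">, whether it has a child tag and its text."""

    def __init__(self):
        super().__init__()
        self.cells = []        # list of [has_child_tag, text]
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "td":
            self._in_cell = ("class", "title") in attrs
            if self._in_cell:
                self.cells.append([False, ""])
        elif self._in_cell:
            self.cells[-1][0] = True   # any tag inside the cell counts as a child

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_cell = False

    def handle_data(self, data):
        if self._in_cell:
            self.cells[-1][1] += data


parser = TitleCells()
parser.feed(SAMPLE)
# Keep cells that have a child tag (drops "1." and "2."), then drop the "More" cell.
titles = [text for has_child, text in parser.cells if has_child][:-1]
print(titles)  # ['Story A', 'Story B']
```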

So we're pretty close to what we want, but the data isn't in a very accessible format yet. Let's try to finagle it into something usable. Right now each story is a 2-tuple of Tag objects:

```python
>>> stories[0]
(<td class="title"><a href="http://sethgodin.typepad.com/seths_blog/2008/08/the-secret-of-t.html">The secret of the web (hint: it's a virtue)</a><span class="comhead"> (sethgodin.typepad.com) </span></td>, <td class="subtext"><span id="score_272314">38 points</span> by <a href="user?id=yangyang42">yangyang42</a> 5 hours ago | <a href="item?id=272314">8 comments</a></td>)
```

What I'd really like is a dictionary containing all of that data. First, let's figure out how to extract each piece of it:

```python
>>> s = stories[0]
>>> s[0].findChildren()[0]['href']
u'http://sethgodin.typepad.com/seths_blog/2008/08/the-secret-of-t.html'
>>> s[0].findChildren()[0].string
u"The secret of the web (hint: it's a virtue)"
>>> s[1].findChildren()[0].string
u'38 points'
>>> s[1].findChildren()[1].string
u'yangyang42'
>>> s[1].findChildren()[2].string
u'8 comments'
>>> s[1].findChildren()[2].string.split(" ")[0]
u'8'
```

So, we've figured out how to grab everything except how long ago the story was posted. So far we've been using the findChildren method to navigate the tag tree, but there is also the contents attribute, which gives access to both tags and the plain strings between them.

```python
>>> s[1].contents
[<span id="score_272314">38 points</span>, u' by ', <a href="user?id=yangyang42">yangyang42</a>, u' 5 hours ago | ', <a href="item?id=272314">8 comments</a>]
>>> s[1].contents[-2].split(" ")
[u'', u'5', u'hours', u'ago', u'', u'|', u'']
>>> s[1].contents[-2].strip().split(" ")
[u'5', u'hours', u'ago', u'', u'|']
>>> s[1].contents[-2].strip().split(" ")[0]
u'5'
```
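As an aside, the empty strings come from calling split(" ") with an explicit separator, which splits on every single space. A quick Python 3 check; the fragment assumes the text node contains two spaces before the |, which is what the empty string between u'ago' and u'|' above implies:

```python
# Assumed text node: note the double space before "|".
fragment = " 5 hours ago  | "

print(fragment.split(" "))          # ['', '5', 'hours', 'ago', '', '|', '']
print(fragment.strip().split(" "))  # ['5', 'hours', 'ago', '', '|']

# With no argument, split() treats any run of whitespace as one separator and
# ignores leading/trailing whitespace, so no empty strings appear.
print(fragment.split())             # ['5', 'hours', 'ago', '|']
print(" ".join(fragment.split()[:2]))  # 5 hours
```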

In reality, though, it's probably easiest to just use a regular expression to extract this data.

```python
>>> import re
>>> txt = unicode(s[1])
>>> txt
u'<td class="subtext"><span id="score_272314">38 points</span> by <a href="user?id=yangyang42">yangyang42</a> 5 hours ago | <a href="item?id=272314">8 comments</a></td>'
>>> DATE_RE = re.compile(r" (?P<qty>\d+) (?P<unit>\w+) ago ")
>>> DATE_RE.findall(txt)
[(u'5', u'hours')]
>>> txt = DATE_RE.findall(txt)[0]
>>> txt
(u'5', u'hours')
>>> u"%s %s" % (txt[0],txt[1])
u'5 hours'
```
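One caveat with indexing findall(...)[0] is that it raises IndexError when a subtext has no age in it. Here is a Python 3 sketch of a more tolerant helper (extract_age is a hypothetical name, not part of the original code) that uses search() and the named groups instead:

```python
import re

# Same pattern as DATE_RE above, as a raw string so "\d" and "\w" reach the regex engine.
DATE_RE = re.compile(r" (?P<qty>\d+) (?P<unit>\w+) ago ")


def extract_age(subtext):
    """Return e.g. '5 hours' from a subtext fragment, or None if there is no age."""
    match = DATE_RE.search(subtext)
    if match is None:
        return None
    return "%s %s" % (match.group("qty"), match.group("unit"))


txt = ('<td class="subtext"><span id="score_272314">38 points</span> by '
       '<a href="user?id=yangyang42">yangyang42</a> 5 hours ago | '
       '<a href="item?id=272314">8 comments</a></td>')
print(extract_age(txt))                  # 5 hours
print(extract_age("no timestamp here"))  # None
```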

Now let's combine all of this into one extracting function:

```python
>>> def extract_story(data):
...     d = {}
...     d['link'] = data[0].findChildren()[0]['href']
...     d['title'] = data[0].findChildren()[0].string
...     d['score'] = data[1].findChildren()[0].string
...     d['poster'] = data[1].findChildren()[1].string
...     d['num_comments'] = int(data[1].findChildren()[2].string.split(" ")[0])
...     d['time'] = " ".join(data[1].contents[-2].strip().split(" ")[:2])
...     return d
...
>>> extract_story(s)
{'title': u"The secret of the web (hint: it's a virtue)", 'poster': u'yangyang42', 'time': u'5 hours', 'score': u'38 points', 'link': u'http://sethgodin.typepad.com/seths_blog/2008/08/the-secret-of-t.html', 'num_comments': 8}
```

There is only one slight flaw here: when a story has no comments, its comments link reads discuss instead of 0 comments, so int() raises a ValueError. We can fix that up pretty quickly, and the improved function looks like this:

```python
def extract_story(s):
    """Turn a (title td, subtext td) pair into a dictionary."""
    d = {}
    d['link'] = s[0].findChildren()[0]['href']
    d['title'] = s[0].findChildren()[0].string
    d['score'] = s[1].findChildren()[0].string
    d['poster'] = s[1].findChildren()[1].string
    try:
        # "8 comments" -> 8
        d['num_comments'] = int(s[1].findChildren()[2].string.split(" ")[0])
    except ValueError:
        # Stories without comments link to "discuss" instead.
        d['num_comments'] = 0
    # " 5 hours ago | " -> "5 hours"
    d['time'] = " ".join(s[1].contents[-2].strip().split(" ")[:2])
    return d
```

A bit ugly, to be sure, but nothing too complex to understand.
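If you'd rather make the discuss case explicit than rely on int() raising a ValueError, a small helper can do the check up front. This is a Python 3 sketch; parse_comment_count is a hypothetical name, and it assumes any label that doesn't start with a number means zero comments:

```python
def parse_comment_count(label):
    """Convert the text of a story's comments link into an int.

    Assumes the label is either "<number> comments" or a word such as
    "discuss" that stands for zero comments.
    """
    words = label.split()
    if words and words[0].isdecimal():
        return int(words[0])
    return 0


print(parse_comment_count("8 comments"))  # 8
print(parse_comment_count("discuss"))     # 0
```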

Playing With The Data

Before we move on, let's take a look at how easily we can play with the data in its current form. For example, we can re-rank the stories by their number of comments:

```python
>>> stories = [ extract_story(x) for x in stories ]
>>> stories.sort(lambda a,b: cmp(a['num_comments'],b['num_comments']))
>>> stories[0]['num_comments']
0
>>> stories[-1]['num_comments']
74
>>> stories.reverse()
>>> stories[0]
{'title': u'A Fundraising Survival Guide', 'poster': u'mqt', 'time': u'1 day', 'score': u'123 points', 'link': u'http://www.paulgraham.com/fundraising.html', 'num_comments': 74}
>>> stories[1]
{'title': u'The Wrong Way to Get Noticed by YC', 'poster': u'matt1', 'time': u'1 day', 'score': u'82 points', 'link': u'http://www.mattmazur.com/2008/08/the-wrong-way-to-get-noticed-by-yc/', 'num_comments': 49}
```
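The cmp-style sort above is Python 2 only; Python 3 removed both cmp() and the cmp argument to sort(). Here is a sketch of the Python 3 equivalent on made-up sample records, using a key function with reverse=True:

```python
# Made-up sample records with a subset of the keys extract_story() returns.
stories = [
    {"title": "A", "num_comments": 8},
    {"title": "B", "num_comments": 74},
    {"title": "C", "num_comments": 0},
    {"title": "D", "num_comments": 8},
]

# Most-commented first; reverse=True keeps ties (A and D) in their original order.
by_comments = sorted(stories, key=lambda story: story["num_comments"], reverse=True)
print([story["title"] for story in by_comments])  # ['B', 'A', 'D', 'C']
```

Unlike sorting ascending and then calling reverse(), reverse=True keeps stories with equal comment counts in their original front-page order.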

We could also rank the posters using the highly proprietary PosterRank algorithm (meaning I just made it up: it's the sum of the scores of each poster's stories).

```python
>>> posters = {}
>>> for story in stories:
...     score = posters.get(story['poster'],0)
...     posters[story['poster']] = score + int(story['score'].split(" ")[0])
...
>>> posters
{u'yangyang42': 38, u'ovi256': 9, u'epi0Bauqu': 32, u'bootload': 11, u'echair': 9, u'matt1': 82, u'iamelgringo': 14, u'prakash': 24, u'gaika': 19, u'ericb': 3, u'pius': 106, u'ml1234': 30, u'markbao': 26, u'nickb': 5, u'pauljonas': 20, u'astrec': 22, u'mqt': 123, u'marvin': 3, u'robg': 8, u'jmorin007': 39, u'Anon84': 2}
>>> p = posters.items()
>>> p
[(u'yangyang42', 38), (u'ovi256', 9), (u'epi0Bauqu', 32), (u'bootload', 11), (u'echair', 9), (u'matt1', 82), (u'iamelgringo', 14), (u'prakash', 24), (u'gaika', 19), (u'ericb', 3), (u'pius', 106), (u'ml1234', 30), (u'markbao', 26), (u'nickb', 5), (u'pauljonas', 20), (u'astrec', 22), (u'mqt', 123), (u'marvin', 3), (u'robg', 8), (u'jmorin007', 39), (u'Anon84', 2)]
>>> p.sort(lambda a,b: cmp(a[1],b[1]))
>>> p
[(u'Anon84', 2), (u'ericb', 3), (u'marvin', 3), (u'nickb', 5), (u'robg', 8), (u'ovi256', 9), (u'echair', 9), (u'bootload', 11), (u'iamelgringo', 14), (u'gaika', 19), (u'pauljonas', 20), (u'astrec', 22), (u'prakash', 24), (u'markbao', 26), (u'ml1234', 30), (u'epi0Bauqu', 32), (u'yangyang42', 38), (u'jmorin007', 39), (u'matt1', 82), (u'pius', 106), (u'mqt', 123)]
>>> p.reverse()
>>> len(p)
21
>>> p
[(u'mqt', 123), (u'pius', 106), (u'matt1', 82), (u'jmorin007', 39), (u'yangyang42', 38), (u'epi0Bauqu', 32), (u'ml1234', 30), (u'markbao', 26), (u'prakash', 24), (u'astrec', 22), (u'pauljonas', 20), (u'gaika', 19), (u'iamelgringo', 14), (u'bootload', 11), (u'echair', 9), (u'ovi256', 9), (u'robg', 8), (u'nickb', 5), (u'marvin', 3), (u'ericb', 3), (u'Anon84', 2)]
```

Looking at that data, the thirty stories come from only twenty-one submitters, and mqt has the highest PosterRank score. You could certainly think of (much) more exciting things to do with the data, but the dictionary format makes it easy to play to your heart's content.
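The same tally can be written with collections.Counter, which starts missing keys at zero and orders posters by total via most_common(). A Python 3 sketch; the posters and scores below are invented sample data, not the front page above:

```python
from collections import Counter

# Invented sample data with the same "poster" and "score" keys as above.
stories = [
    {"poster": "alice", "score": "123 points"},
    {"poster": "bob", "score": "60 points"},
    {"poster": "bob", "score": "46 points"},
    {"poster": "carol", "score": "82 points"},
]

poster_rank = Counter()
for story in stories:
    # "123 points" -> 123; a poster not seen yet starts at 0.
    poster_rank[story["poster"]] += int(story["score"].split()[0])

print(poster_rank.most_common())  # [('alice', 123), ('bob', 106), ('carol', 82)]
```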

Caching, Caching, Caching

Now let's backtrack a bit and look at how we can be more considerate about fetching pages. Our first page fetching looked like this:

```python
>>> import httplib2
>>> from BeautifulSoup import BeautifulSoup
>>> conn = httplib2.Http(".cache")
>>> page = conn.request(u"http://news.ycombinator.com/","GET")
```

Let's create a helper function that handles those details for us.

```python
import httplib2
from BeautifulSoup import BeautifulSoup

SCRAPING_CONN = httplib2.Http(".cache")

def fetch(url,method="GET"):
    return SCRAPING_CONN.request(url,method)

page = fetch("http://news.ycombinator.com/")
```

That doesn't really improve things, but it opens the door for us to add some caching to our fetches.

```python
import httplib2,time
from BeautifulSoup import BeautifulSoup

SCRAPING_CONN = httplib2.Http(".cache")
SCRAPING_CACHE_FOR = 60 * 15 # cache for 15 minutes
SCRAPING_CACHE = {}

def fetch(url,method="GET"):
    key = (url,method)
    now = time.time()
    # Serve from the in-memory cache while the entry is still fresh.
    if key in SCRAPING_CACHE:
        data,cached_at = SCRAPING_CACHE[key]
        if now - cached_at < SCRAPING_CACHE_FOR:
            return data
    data = SCRAPING_CONN.request(url,method)
    SCRAPING_CACHE[key] = (data,now)
    return data

# Fetch the page.
page = fetch("http://news.ycombinator.com/")
# Fetch the page again, but use cached copy.
page = fetch("http://news.ycombinator.com/")
```

Now we'll retrieve each individual page at most once every fifteen minutes, which will help cut down on how much we stress the server.²
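The same time-to-live idea can be packaged as a small class so that the fetch function and the clock can be swapped out, which makes it easy to test without hitting a real server. A Python 3 sketch; TTLCache, fake_fetch and the fake clock are hypothetical names for illustration, and time.monotonic is used instead of time.time so that adjustments to the system clock can't make entries look fresher or staler than they are:

```python
import time


class TTLCache:
    """Memoize fetcher(url, method) results for `ttl` seconds.

    fetcher and clock are injectable (assumptions of this sketch) so the
    cache can be exercised without touching the network.
    """

    def __init__(self, fetcher, ttl=60 * 15, clock=time.monotonic):
        self.fetcher = fetcher
        self.ttl = ttl
        self.clock = clock
        self._entries = {}  # (url, method) -> (data, fetched_at)

    def get(self, url, method="GET"):
        key = (url, method)
        now = self.clock()
        if key in self._entries:
            data, fetched_at = self._entries[key]
            if now - fetched_at < self.ttl:
                return data  # still fresh: no request is made
        data = self.fetcher(url, method)
        self._entries[key] = (data, now)
        return data


# Usage with a stand-in fetcher that just counts how often it is called.
calls = []

def fake_fetch(url, method):
    calls.append((url, method))
    return "<html>response %d</html>" % len(calls)

fake_now = [0.0]
cache = TTLCache(fake_fetch, ttl=900, clock=lambda: fake_now[0])
cache.get("http://news.ycombinator.com/")  # miss: fetches
cache.get("http://news.ycombinator.com/")  # hit: served from memory
fake_now[0] = 901.0
cache.get("http://news.ycombinator.com/")  # expired: fetches again
print(len(calls))  # 2
```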

Staggering Requests

Now, let's say we wanted to fetch the article that each story refers to. Using our new fetch function that's pretty easy to do:

```python
>>> pages = [fetch(x['link']) for x in stories]
```

In this situation each of the stories will be on a different server, so spacing out the requests won't be terribly important, but let's imagine for a moment that they were all on the same server (for example, if we wanted to fetch the comments page for each story). We can modify the fetch function to be more considerate about its requests:

```python
import httplib2,time
from BeautifulSoup import BeautifulSoup

SCRAPING_CONN = httplib2.Http(".cache")
SCRAPING_CACHE_FOR = 60 * 15 # cache for 15 minutes
SCRAPING_REQUEST_STAGGER = 1.1 # in seconds
SCRAPING_CACHE = {}

def fetch(url,method="GET"):
    key = (url,method)
    now = time.time()
    if key in SCRAPING_CACHE:
        data,cached_at = SCRAPING_CACHE[key]
        if now - cached_at < SCRAPING_CACHE_FOR:
            return data
    # Only requests that actually go to the network are delayed.
    time.sleep(SCRAPING_REQUEST_STAGGER)
    data = SCRAPING_CONN.request(url,method)
    SCRAPING_CACHE[key] = (data,now)
    return data
```

With that modification it'll return cached data as quickly as possible, but will stagger any new requests. This is still a bit rough, though: we really want to stagger requests per domain, rather than delaying every single request.

```python
import httplib2,time,re
from BeautifulSoup import BeautifulSoup

SCRAPING_CONN = httplib2.Http(".cache")
# Captures the host part of a URL, e.g. "news.ycombinator.com".
SCRAPING_DOMAIN_RE = re.compile(r"\w+://(?P<domain>[^/:?#]+)")
SCRAPING_DOMAINS = {}
SCRAPING_CACHE_FOR = 60 * 15 # cache for 15 minutes
SCRAPING_REQUEST_STAGGER = 1.1 # in seconds
SCRAPING_CACHE = {}

def fetch(url,method="GET"):
    key = (url,method)
    now = time.time()
    if key in SCRAPING_CACHE:
        data,cached_at = SCRAPING_CACHE[key]
        if now - cached_at < SCRAPING_CACHE_FOR:
            return data
    domain = SCRAPING_DOMAIN_RE.findall(url)[0]
    # Wait out whatever is left of the stagger period for this domain.
    if domain in SCRAPING_DOMAINS:
        last_scraped = SCRAPING_DOMAINS[domain]
        elapsed = now - last_scraped
        if elapsed < SCRAPING_REQUEST_STAGGER:
            wait_period = SCRAPING_REQUEST_STAGGER - elapsed
            time.sleep(wait_period)
    SCRAPING_DOMAINS[domain] = time.time()
    data = SCRAPING_CONN.request(url,method)
    SCRAPING_CACHE[key] = (data,now)
    return data
```
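The per-domain bookkeeping can also be isolated into its own object. A Python 3 sketch under a few assumptions: DomainThrottle is a hypothetical name, the host is taken from urllib.parse.urlsplit rather than a regular expression (so hyphenated hostnames, ports and URLs without a trailing slash are handled), every duration is in seconds, and clock and sleep are injectable so the logic can be checked without actually waiting:

```python
import time
from urllib.parse import urlsplit


class DomainThrottle:
    """Keep at least `min_interval` seconds between requests to the same host."""

    def __init__(self, min_interval=1.1, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self.clock = clock
        self.sleep = sleep
        self._last_request = {}  # host -> clock() value of the last request

    def wait(self, url):
        """Block until a request to url's host is allowed, then record it."""
        host = urlsplit(url).hostname or ""  # lowercased, port stripped
        last = self._last_request.get(host)
        if last is not None:
            elapsed = self.clock() - last
            if elapsed < self.min_interval:
                self.sleep(self.min_interval - elapsed)
        self._last_request[host] = self.clock()


# Usage with a fake clock whose sleep just advances time and records the pause.
fake_now = [0.0]
slept = []

def fake_sleep(seconds):
    slept.append(seconds)
    fake_now[0] += seconds

throttle = DomainThrottle(1.1, clock=lambda: fake_now[0], sleep=fake_sleep)
throttle.wait("http://news.ycombinator.com/item?id=1")  # first request: no wait
throttle.wait("http://example.com/")                    # different host: no wait
fake_now[0] += 0.5
throttle.wait("http://news.ycombinator.com/item?id=2")  # same host 0.5 s later
print([round(s, 2) for s in slept])  # [0.6]
```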

Now we have a convenient and compassionate fetch function, and we can write the same barbaric code as before:

```python
>>> pages = [fetch(x['link']) for x in stories]
```

And it's no longer going to pound any servers, even if all the stories are located on the same domain. Instead it'll download the stories sequentially, respecting the 1.1 second pause between requests to the same domain. This could be improved upon in a number of ways (running the downloads in parallel, etc.), but we've created a nice foundation for a tool that we can use recklessly without inflicting our recklessness upon others.
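For the parallel improvement mentioned above, one option that stays polite is to work on different hosts concurrently while keeping the requests to any single host sequential and staggered. A Python 3 sketch using concurrent.futures.ThreadPoolExecutor; fetch_politely_in_parallel is a hypothetical helper, the fetch function passed in stands in for a real one, and no caching is included:

```python
import time
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from urllib.parse import urlsplit


def fetch_politely_in_parallel(urls, fetch, delay=1.1, max_hosts=8, sleep=time.sleep):
    """Fetch urls concurrently across hosts, but sequentially within each host.

    fetch is any function taking a single URL. Each host's URLs are handled by
    one task that sleeps `delay` seconds between requests, and at most
    `max_hosts` hosts are contacted at the same time.
    Returns a dict mapping url -> whatever fetch returned.
    """
    by_host = defaultdict(list)
    for url in urls:
        by_host[urlsplit(url).hostname or ""].append(url)
    if not by_host:
        return {}

    def fetch_host(host_urls):
        results = {}
        for i, url in enumerate(host_urls):
            if i:
                sleep(delay)  # stagger requests to the same host
            results[url] = fetch(url)
        return results

    merged = {}
    with ThreadPoolExecutor(max_workers=min(len(by_host), max_hosts)) as pool:
        for results in pool.map(fetch_host, by_host.values()):
            merged.update(results)
    return merged


# Usage with a stand-in fetch function and a sleep that only records pauses.
urls = ["http://a.example/1", "http://a.example/2", "http://b.example/1"]
pauses = []
pages = fetch_politely_in_parallel(urls, fetch=lambda u: "page:" + u, sleep=pauses.append)
print(len(pages), pauses)  # 3 [1.1]
```

Grouping by host first means the stagger never has to be coordinated between threads, since only one task ever talks to a given host.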

Pulling the Code Together

Pulling together the code we've written, we can build a simple and considerate script that displays the top HackerNews stories, sorted by number of comments, every five minutes, while fetching the page at most once every fifteen minutes.

```python
import httplib2,time,re
from BeautifulSoup import BeautifulSoup

SCRAPING_CONN = httplib2.Http(".cache")
SCRAPING_DOMAIN_RE = re.compile(r"\w+://(?P<domain>[^/:?#]+)")
SCRAPING_DOMAINS = {}
SCRAPING_CACHE_FOR = 60 * 15 # cache for 15 minutes
SCRAPING_REQUEST_STAGGER = 1.1 # in seconds
SCRAPING_CACHE = {}

def fetch(url,method="GET"):
    key = (url,method)
    now = time.time()
    if key in SCRAPING_CACHE:
        data,cached_at = SCRAPING_CACHE[key]
        if now - cached_at < SCRAPING_CACHE_FOR:
            return data
    domain = SCRAPING_DOMAIN_RE.findall(url)[0]
    if domain in SCRAPING_DOMAINS:
        last_scraped = SCRAPING_DOMAINS[domain]
        elapsed = now - last_scraped
        if elapsed < SCRAPING_REQUEST_STAGGER:
            wait_period = SCRAPING_REQUEST_STAGGER - elapsed
            time.sleep(wait_period)
    SCRAPING_DOMAINS[domain] = time.time()
    data = SCRAPING_CONN.request(url,method)
    SCRAPING_CACHE[key] = (data,now)
    return data

def extract_story(s):
    d = {}
    d['link'] = s[0].findChildren()[0]['href']
    d['title'] = s[0].findChildren()[0].string
    d['score'] = s[1].findChildren()[0].string
    d['poster'] = s[1].findChildren()[1].string
    try:
        d['num_comments'] = int(s[1].findChildren()[2].string.split(" ")[0])
    except ValueError:
        d['num_comments'] = 0
    d['time'] = " ".join(s[1].contents[-2].strip().split(" ")[:2])
    return d

def fetch_stories():
    page = fetch(u"http://news.ycombinator.com/")
    soup = BeautifulSoup(page[1])
    titles = [x for x in soup.findAll('td','title') if x.findChildren()][:-1]
    subtexts = soup.findAll('td','subtext')
    stories = [extract_story(s) for s in zip(titles,subtexts)]
    return stories

while True:
    stories = fetch_stories()
    stories.sort(lambda a,b: cmp(a['num_comments'],b['num_comments']))
    stories.reverse()
    for s in stories:
        print u"[%s cmnts] %s (%s) by %s, %s ago." % (s['num_comments'],s['title'],s['link'],s['poster'],s['time'])
    print u"\n\n\n"
    time.sleep(60 * 5)
```

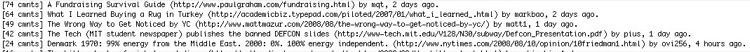

If you enter that code into a Python interpreter, the output will look like this:

You can download a file containing the code here.

Sidenote about httplib2

You probably noticed early on that we're doing something cache-related when we initialize the httplib2.Http object we use for requests. The initialization code looks like this:

```python
import httplib2
SCRAPING_CONN = httplib2.Http(".cache")
```

What's happening there is that httplib2 understands HTTP caching directives: given a directory name (here .cache), it stores responses on disk and reuses them when the server's caching headers allow it. Thus there is an additional layer of caching underneath the one we wrote, which makes this stack a bit friendlier than what we've put together ourselves.
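To give a feel for what "appropriate caching directives" means, here is a deliberately simplified Python 3 sketch of one freshness check from HTTP caching (RFC 9111): a stored response may be reused while its age is below the Cache-Control max-age. It is not httplib2's implementation; is_fresh and parse_cache_control are hypothetical helpers that ignore Expires, s-maxage, validators and heuristic freshness. Note that the front-page response headers shown earlier carry no Cache-Control header at all, so this simplified check would never treat that page as fresh, which is one reason the caching layer we wrote ourselves still earns its keep.

```python
def parse_cache_control(value):
    """Split a Cache-Control header into {directive: argument} (arguments may be '')."""
    directives = {}
    for part in value.split(","):
        name, _, arg = part.strip().partition("=")
        if name:
            directives[name.strip().lower()] = arg.strip().strip('"')
    return directives


def is_fresh(headers, age_seconds):
    """Return True if a stored response of this age may be reused as-is.

    Simplified: only no-store, no-cache and max-age are considered, and header
    names are assumed to be lowercase, as in the response dict shown earlier.
    """
    directives = parse_cache_control(headers.get("cache-control", ""))
    if "no-store" in directives or "no-cache" in directives:
        return False
    max_age = directives.get("max-age", "")
    return max_age.isdecimal() and age_seconds < int(max_age)


cached_headers = {"cache-control": "public, max-age=300"}
print(is_fresh(cached_headers, 120))  # True
print(is_fresh(cached_headers, 600))  # False
print(is_fresh({}, 10))               # False: no directives, so not fresh here
```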

Ending Thoughts

I feel pretty strongly that screen scraping someone's website is like visiting their home: it's not that hard to be considerate and polite, and the host will really appreciate it. Hopefully this tutorial has given you some ideas about other, even more effective ways to reduce the load caused by your screen scraping. Let me know if I've made any mistakes; I'd be glad to hear any comments and thoughts on how you screen scrape responsibly.

¹ This isn't, in any sense, an evil thing to do. The reason people throttle screen scraping is to deal with inconsiderate scrapers who consume inordinate amounts of bandwidth and other finite resources (CPU load, etc.).

² However, keep in mind when you're using local memory to store data that separate instances of the Python interpreter cannot share it. If your web server ran a script like this across several interpreter instances, it would retrieve the page once every fifteen minutes (assuming it was constantly trying to fetch the data) for each interpreter instance.

In such situations you'd be better off using something like memcached, which would allow the instances to share their caches.